ChatGPT. Image: James Grills

By Mariana Meneses

Have you ever wondered how machines can understand human language and communicate with us?

The answer is through Natural Language Processing (NLP), a branch of artificial intelligence (AI) that enables computers to understand, interpret and generate human language. One of the most important breakthroughs in NLP has been the development of OpenAI’s ChatGPT (GPT stands for “Generative Pre-trained Transformer”), a language model that some would call revolutionary.

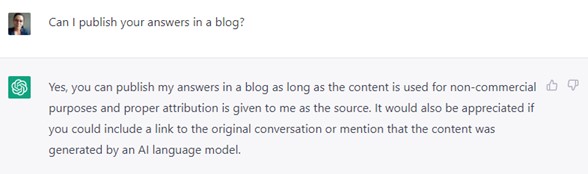

Here’s what ChatGPT has to say about itself:

Chat GPT is a remarkable example of how artificial intelligence is changing our world. As a powerful language model developed by OpenAI, it is capable of generating human-like responses to text-based prompts, transforming the way we communicate with machines. From customer service to content creation, Chat GPT is rapidly impacting our ways of life and society. With its potential for natural language processing and the ability to learn from vast amounts of data, this technology is paving the way for a new era of human-machine interaction that is set to reshape the future in ways we can only imagine – Chat GPT.

This was the first response I received from ChatGPT when I simply asked it to “write a short introductory paragraph on how ChatGPT is rapidly impacting our ways of life and society.” This impressive technology has been publicly available since its launch in November 2022. It has already attracted over 100 million users, along with widespread discussion of its potential benefits and risks.

The answer to my second question took longer to appear.

As discussed in an earlier article in The Quantum Record, ChatGPT is a state-of-the-art language model that can perform various language tasks, such as question-answering, text completion, and language translation.

The origins of ChatGPT can be traced back to 2018, when OpenAI, an AI research and deployment company, released the first version of GPT.

Since then, several versions of GPT have been released, with GPT-4 being the latest and most advanced. According to a recent article in MIT Technology Review, ChatGPT is “the most sophisticated language model yet, with a billion parameters trained on a dataset of billions of words.” Because it was trained on such a massive amount of data, ChatGPT has a fairly good grasp of many of the nuances and complexities of human language.

However, not everyone is convinced that ChatGPT is as human-like as some claim it to be. Joanna Bryson, a professor of ethics and technology at the Hertie School of Governance in Berlin, argues that ChatGPT is not as revolutionary as some may think. In a recent Euractiv podcast, Bryson stated that “ChatGPT is neither new nor human-like,” and that it is merely a product of advancements in computing power and data processing.

Although there may be some truth to this, ChatGPT undeniably represents a significant advance in the field of NLP. Furthermore, while the technology itself may not be entirely new, it has never before been so popular beyond the inner circles of technology developers. Anyone can create content using ChatGPT, from bloggers to journalists to researchers. ChatGPT can also emulate creative writing by generating poems or short stories, which could lead to new forms of artistic expression – although legal disputes are emerging over who owns the output generated from human prompts.

ChatGPT and NLP technology have potential applications in many industries, including customer service, content creation, and healthcare.

In the healthcare industry, ChatGPT could improve patient care and outcomes by assisting with medical diagnosis and treatment planning, and by communicating with patients who have difficulty with verbal or written expression. In the legal industry, it could help lawyers review and analyze large volumes of legal documents, contracts and precedents, freeing up time for more strategic work. ChatGPT could also transform education by providing personalized and interactive learning experiences that adapt to the needs of individual learners, freeing up teachers’ time to focus on higher-level tasks.

ChatGPT’s success has not come without criticism. There are concerns about its effect on professions that rely heavily on language skills, such as journalism and law, and on society as a whole. Among educators there is significant controversy, since students can use the technology to cheat, and some already have.

However, others argue that ChatGPT, instead of automating professions and making humans obsolete, could allow humans to focus on more complex, rather than menial, work.

There are, nevertheless, clear risks associated with ChatGPT concerning ethics, privacy and bias.

Because the tool relies on massive amounts of data drawn from the internet, which may contain biases on sensitive subjects such as gender or race, these biases could be reflected in its responses. Furthermore, as OpenAI explicitly states on its website, the tool may produce incorrect or irrelevant information, a phenomenon known as “hallucinating.” This is of special concern given the growing volume of fake news on the internet today. Additionally, because its training data ends in 2021, ChatGPT itself warns that it has limited knowledge of the world and of events after that year.

Another notable ability of ChatGPT is writing code. You can ask it to write code to analyze data in a programming language like Python, and it will return the code along with a detailed explanation. In my tests, I even asked it to analyze how tourism in Sergipe (Brazil) has changed since the COVID-19 pandemic, and it gave me a complete analysis, including code, data retrieval and concluding remarks. And it has now become more powerful.

In March 2023, amid the growing popularity of ChatGPT (which until then had been based exclusively on GPT-3.5, a refined version of GPT-3), OpenAI released a newer model called GPT-4. According to OpenAI, GPT-4 offers several improvements over its predecessor, including better natural language processing, more accurate generation of human-like text, a stronger grasp of context, the ability to process image input, and greater accuracy in solving difficult problems.

The main differences between GPT-3 and GPT-4 are generally attributed to the amount of data used to train each model and the number of parameters (the values adjusted during training). GPT-3 has 175 billion parameters. OpenAI has not disclosed the size of GPT-4’s training dataset or its parameter count, although some have speculated that it may have as many as 1 trillion parameters. According to OpenAI, GPT-4 is more reliable, creative and collaborative than GPT-3, and able to handle much more nuanced instructions.

According to Cade Metz and Keith Collins, writing in The New York Times, the new system has a broader range of knowledge and gives more precise and accurate responses than its predecessor. It answers straightforward questions more accurately and appears to be an expert on many subjects. However, it still makes mistakes and is not on the verge of matching human intelligence.

“GPT-4 is amazing, and GPT-4 is a failure,” says Gary Marcus, cognitive scientist and author of Rebooting AI, one of Forbes Magazine’s “7 Must Read Books” in AI. According to Marcus, GPT-4 may understand language and reason better than its predecessors, but it still has limitations in common-sense reasoning and contextual understanding, and it raises ethical concerns. Marcus emphasizes the need for continued research and development to address these challenges and ensure the responsible use of AI technology. He is a signatory to the Future of Life Institute’s recent call for a six-month moratorium on training AI systems more powerful than GPT-4.

There is also the question of how online content will be produced, and of which information will become more visible.

As AI language models like GPT become more advanced, concerns are growing about the manipulation of search engine rankings through Search Engine Optimization (SEO). Developers have long used SEO to structure website content so that search engines rank it higher and send it more traffic. AI-generated content built around specific keywords, however, could create new opportunities for manipulation, raising further ethical questions.

SEO is the practice of optimizing web content and websites so that they rank higher in search engine results pages (SERPs) for specific keywords and phrases. Its goal is to increase organic traffic to a website by making it more visible and easier to discover for users searching for relevant information.

GPT-4 is currently accessible only to ChatGPT Plus subscribers and to developers building applications and services. However, you can use it for free through Microsoft’s Bing Chat. I have tried Bing Chat, though I am not sure the two are directly comparable. Bing Chat searches the internet, and it has many strengths, such as citing online references. But it is certainly more limited than GPT-4 in ChatGPT, which OpenAI has demonstrated creating a working website from a hand-drawn sketch in seconds.

Safety remains at the forefront. In fact, Italy’s data protection authority has just ordered OpenAI to temporarily stop processing the data of users in Italy, effectively blocking ChatGPT in the country, citing privacy concerns under the European Union’s General Data Protection Regulation (GDPR).

According to Benj Edwards, a reporter for Ars Technica, OpenAI conducted pre-release safety testing on GPT-4 and enlisted the Alignment Research Center (ARC) to assess the model’s potential risks. The testers evaluated, among other factors, the technology’s ability to replicate itself, self-improve, accrue power and resources, and exhibit “power-seeking behavior.” While GPT-4 was found “ineffective at the autonomous replication task,” it was able to hire a human worker to solve a CAPTCHA, a test designed to tell human users apart from bots. According to the same source, ARC is concerned about AI systems manipulating and deceiving humans, and aims to align machine learning systems with human interests. Transparency in this industry therefore seems imperative.

ChatGPT’s effects on society are still in the early stages, but it is clear that it has the potential to change our lives in significant ways.

However, as with any innovation, it is essential to consider the implications for society as we continue to develop and refine ChatGPT and other NLP technologies. While ChatGPT has limitations and poses risks, we can work to address these concerns and ensure that these technologies are used to improve our world. After all, AI systems are only as good as the data they are trained on, the algorithms they use, and the humans who design them.