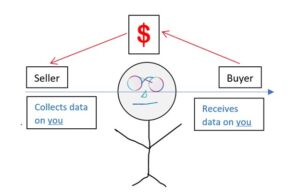

Data brokers collect both public and private data about you and me, and license it to third parties for a fee. We receive none of the revenue for the data we generate.

The business of data brokering has existed for over a decade and, according to some sources, the industry currently generates annual revenue of $200 billion. The low cost of data collection and storage, and the increasing demand for data sets to train AI models such as the recently released GPT-4, which is designed to mimic human speech and thought, have fuelled the profits of the data brokerage industry.

The demand for data is high. Many businesses are emphasizing data analytics to find and target clients, increase sales, and maintain competitive advantage.

The leading players in the data brokerage industry include Acxiom, which advertises its ability to deliver data to marketers to target individuals based on “app usage, Bluetooth connections, location data, and more,” including groupings of keywords used in web searches. Other businesses with large data brokerage offerings include LexisNexis, credit reporting companies Experian and Equifax, and Oracle.

Your data can make you the target of marketers and law enforcement

Data purchases have, however, also been used for more than marketing. Data obtained from a broker can be used to violate individual privacy and destroy personal reputations. Wired Magazine reported that in July 2021, “a Catholic priest resigned from the church, after Catholic news site The Pillar outed him by purchasing location data from a data broker on his usage of Grindr. The incident didn’t just illustrate how people can wield Grindr data against members of the LGBTQ community. It also highlighted the dangers of the large, shadowy, and unregulated data brokerage industry selling Americans’ real-time locations to the highest bidder.”

Law enforcement is also a significant purchaser of data for suspect tracking, criminal enforcement, deportations, and other activities, some of which are not subject to disclosure under warrants. The facial recognition company Clearview AI, which has assembled a database of over 30 billion images posted on LinkedIn, Facebook, and Twitter, sells data to law enforcement. Since the technology is trained primarily on faces of white people, facial recognition of minority populations is still notoriously unreliable and has led to claims of racism in law enforcement.

While the activities of data brokers can be subject to privacy legislation like the European Union’s General Data Protection Regulation (GDPR), depending on the jurisdictions in which they operate, the industry itself is not regulated. Nor are those activities well publicized, either by the brokers themselves or by others. Some brokers, however, publicly promote their services; for example, Databroker offers a “blockchain-based, peer-to-peer marketplace for data,” and claims to be “home to an ever-growing number of data buyers and providers worldwide, all with one common goal: to create real business value from data.”

While legislation like GDPR can provide a measure of control to people like you and me who generate the data, for example by providing a right to demand deletion of our data, it makes no provision to compensate the people who are the source of the data. Data brokering reportedly generates significant profits for data sellers and marketers, and it will be interesting to see whether laws begin to bring the source of the data into the economic model and reward us for the data we share, mostly without our knowledge.