In current public-key encryption cryptographic systems like the widely-used RSA, the process to synchronize keys between sender and receiver is in eminent danger from cyberattack using quantum algorithms. Image: thesslstore.com

By James Myers

We are in what is being called the Second Quantum Revolution.

Viable quantum computers could soon be running algorithms that will pose significant ethical and security issues. There will be serious risks to encrypted sensitive data, the exchange of public keys, and methods of authentication for governments, businesses, individuals, and financial transactions.

The quantum “cryptography crisis” underscores the crucial importance of securing the world’s data by transitioning from classical encryption methods to new cryptographic standards that are resistant to attacks using the rapidly developing technology. The question remains, however, how much time will we have to conquer a cryptography crisis? Will the new protocols for quantum-resistant cryptography be developed and tested before the quantum computer is perfected and becomes a practical reality?

Progress is being made in devising new methods to secure the world’s data. The problem as it now exists is in the math.

The looming crisis arises because the method now most commonly used to secure data is based on mathematical challenges, and the speed of the quantum computer will allow it to make the calculations to crack the codes far more quickly than today’s computers can. A number of recent proposals would employ a combination of geometry and mathematics for quantum-secure cryptography, and these are undergoing the process of evaluation and testing. Announcement of a new standard is expected within months.

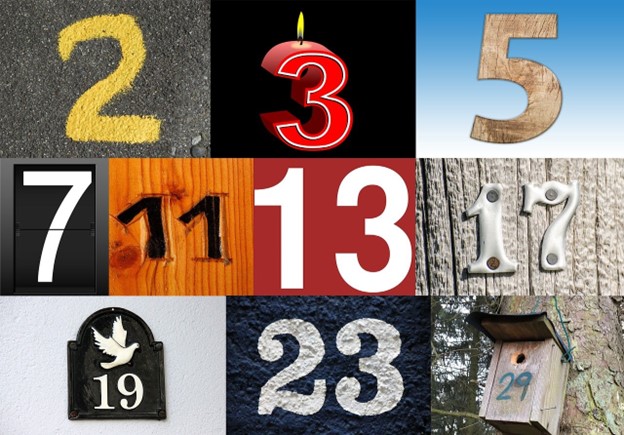

The fundamental theorem of arithmetic says that all numbers greater than one are the product of two or more prime numbers that are divisible only by one and themselves. Image: Pixabay

Existing cryptography is most widely based on prime number factoring, a time-consuming process that will reduce exponentially with the speed and accuracy of quantum computers.

Prime number factoring is a mathematical process that breaks a number into the product of two or more primes (prime numbers are divisible only by 1 and themselves), and the fundamental theorem of arithmetic says that every number greater than one can be derived by multiplying two or more prime numbers. Although a number like 1200 is relatively quickly broken down into its prime factors 2, 3, and 5 (as in 1200 = 24 ∙ 31∙ 52), factoring very large numbers is beyond the capacity of many present computers.

The numbers 30 and 15 are not prime, because they are divisible by more than 1 and themselves, but they can be broken down into the product of prime numbers 2, 3, and 5.

The discrete log problem is another current method for encryption that requires the use of elliptic curves and exponentiation with modular arithmetic.

In public-key encryption systems like the widely-used RSA, the encryption key is created by multiplying two large prime numbers, raising them to a predetermined exponent, and attaching an auxiliary number. While the encryption key is public and can be created by anyone using the algorithm, the decryption key is a secret and to break it would require the practically impossible task of factoring the product of the primes. To decrypt, the receiver’s device must synchronize with the values in the encryption, and the part of the encryption that contains the means to make the receiver’s key symmetric with the sender’s is in eminent danger from the quantum algorithms.

Existing cryptographic methods will be rendered obsolete in the post-quantum era when information will no longer be transmitted in single-state binary bits, as in today’s “classical” computers, but in their quantum computing analogs called qubits which can exist in multiple states simultaneously.

In a quantum computer operating with many more qubits than currently possible, Shor’s algorithm will use polynomial time – the vastly decreased time scale that applies to quantum signals – to factor the prime basis of numbers far more quickly. There’s a further concern that Grover’s algorithm, developed to search with high probability for the inputs to a quantum function’s outputs, could allow quantum computers to break 256- or 512-bit key cryptography used with most of today’s computers.

The solution for post-quantum cryptography (PQC) may lie in geometry.

In July 2022, the U.S. National Institute of Standards and Technology (NIST) announced the selection of four different possible PQC algorithms, with the goal of settling on not one but several that would provide security for quantum and existing “classical” computers.

The process has been open to public testing and comment, and this April NIST held its 5th annual PQC Standardization Conference where it announced an intention to release the first PQC standards this summer. When a standard is selected, governments, businesses, and other consumers will require a significant amount of effort and time to inventory existing systems and encryption methods, establish a transition plan for the new PQC protocols and decommission old technology, and finally implement and test. In the meantime, there are mounting concerns that cybercriminals are storing currently encrypted data expecting that they will be able to decrypt it later.

There have even been concerns that a quantum computer capable of decryption might be developed and operated in secret to exploit sensitive data, although last year RAND assessed the possibility as highly unlikely.

The spherical geometry of signal transmission between qubits in the quantum computer is particularly challenging for measurement, given the presence of the irrational and transcendental π in the ratio of circumference to diameter. Artistic impression from Pixabay

Over the past two years, testing and public review of the four algorithms selected by NIST for evaluation in 2022 have focused on their different structure.

Three of the four algorithms encode in a matrix structure – a matrix can be thought of as a series of dots forming a geometric lattice in multiple spatial dimensions. One of the four, however, uses a different method involving “hash functions,” which are like unique digital fingerprints created by complex mathematical operations, and although it is slower it promises an extra layer of security. Other possible cryptographic approaches continue to be explored.

Some matrix methods include “Learning With Errors” (LWE), which employs a mathematically and geometrically difficult process of discovering a hidden vector in a set of linear equations within a matrix in which small errors have been deliberately introduced. Also sometimes used is the shortest vector problem that requires determination of the least distance between points in a matrix.

Lattice-based cryptography: The tricky math of dots | Chalk Talk

Lattice-based cryptography appears to be gaining the general consensus for all-purpose security. More technical details on lattice-based cryptography are available in this pdf.

At its April 2024 conference, NIST announced that it had tested and recommends the use of three lattice-based encryption algorithms, and one algorithm that operates with hash functions.

The conference presentation explained the relative benefits and drawbacks of each of the four, with one of the lattice algorithms being recommended as the primary basis for encryption and the hash function algorithm recommended for additional security when its relative speed disadvantage can be tolerated. One of the lattice algorithms was rated as particularly complex to implement.

Quantum-secure encryption must guard against an incredibly high rate of attack. One conference presenter highlighted that an attacker would have to identify one out of a staggering 287 keys (the value is 27 digits long and is greater than a trillion trillion), and then execute 229 attack queries on the key (the exponent is one-third of that used by the attacker to find the key). NIST says the matrix structure of the recommended general-purpose algorithm offers a robust defence with a speed advantage against such onslaughts.

What else is on the horizon for PQC?

NIST is promising to deliver guidelines for transitioning to post-quantum encryption standards.

Some call the concerted and rigorous efforts that will be required to implement post-quantum security, in the myriad of systems that have proliferated over the digital age, “Y2Q,” after the “Y2K” problem at the turn of the millennium. The vast investment of labour and cost to prevent digital chaos at midnight on December 31, 1999 preoccupied the world in the years leading up to 2000, because until then computers had been designed to accommodate only two digits in dates, when four digits were required for algorithms to differentiate between the years 2000 and 1900.

NIST has expressed an interest in developing additional general-purpose processes that are not based on structured lattices.

There are some emerging alternatives, including one that was inspired by a paper posted on arxiv.org in 2021 by University of Texas Austin graduate student William Kretschmer, whose studies focus on quantum complexity theory. The approach uses a process to distinguish between two quantum states which proves exceptionally difficult because of the physics of the quantum. The quantum, which is the smallest bit of energy that can either cause change or be changed, can exist simultaneously in a combination of two states (“superposition”), and measuring a quantum destroys whatever combined state it happens to be in.

The particular challenge in the state discrimination approach is to identify the inputs for the output state that was destroyed during measurement.

Picturing the interaction of a quantum wave in superposition. Image: University of California Berkeley

Kretschmer’s state discrimination problem intrigued many researchers who have been collaborating in developing the potential cryptographic method.

A paper by one group of researchers, Fermi Ma, John Wright, and Alex Lombardi, established the method’s robustness even in the presence of an “oracle,” a term that computer scientists use to describe a hidden code that is applied to the input and acts in a way that appears random. While a single query of the oracle by an attacker would not result in decryption, it remains to be determined whether it is possible that the oracle could be consulted more than once in an attack.

In the meantime, the group’s paper is also significant because it connects the question with a major unresolved problem involving the distinction between oracles in quantum computing and today’s “classical” computing, posed in 2006 by Kretschmer’s PhD supervisor Scott Aaronson and mathematician Greg Kuperberg.

The PQC race is on.

No one knows when a fully functional quantum computer will be developed with sufficient power to overcome current encryption based on the mathematics of prime number factoring and the discrete log problem. The question rests on resolving a number of challenges in reducing the “noise,” or errors, prevalent in today’s quantum computer prototypes that prevent the stable connection of enough qubits to operate the code-cracking Shor’s and Grover’s algorithms.

As we have reported, however, major progress is being made in overcoming the quantum error correction problem. Additionally, rapid advances in materials science holds the tantalizing possibility of discovering superconductors that would eliminate noise and operate at room temperature without the prohibitively expensive supercooling or high pressure currently required to achieve superconductivity.

The race is on to secure the world’s data against cybercriminal attack using quantum algorithms. Image: Tumisu of Pixabay

Whatever the future holds for the progress of human ingenuity in perfecting the quantum computer, the safest bet would be that we will solve the problems sooner rather than later.

When it’s widely believed another decade might pass before quantum computers become fully operational, history demonstrates that we often underestimate our problem-solving creativity.

Given the time that will be required for implementation and testing, the current rapid pace of progress underscores the imperative of developing post-quantum cryptography methods as soon as humanly possible. In facing the “Y2Q” crisis, we can draw both hope and inspiration from the way the world rallied before the year 2000 to prevent digital chaos by defeating the millennium bug in computer dates.