Encoding human emotional experience in an artificial neural network is highly subjective and prone to error. While the emotional outputs of artificial neural networks behind large language models like ChatGPT and Claude often appear convincing, misinterpretations have serious consequences. AI-generated image by Brian Penny, of Pixabay.

By James Myers

We have all experienced many ways that emotions influence memories, and now science is pinpointing the brain’s mechanisms that connect emotion so strongly to the events that we recall. Understanding the interactions of emotion and memory could be key for artificial neural networks to interpret the intentions embedded in the meaning of a human user’s prompts.

Although emotion is a biological phenomenon that machines can’t experience, artificial neural networks (ANNs) that power large language models (LLMs) are often asked to generate advice for handling emotional situations or to recognize the emotional state of the human user. Most users are unaware of the complexities of programming LLMs like Grok, Claude, and ChatGPT for emotional recognition, and the resulting wide range of potential errors in their outputs.

While scientists have identified several brain regions associated with emotions and emotional memory processing, ongoing research continues to investigate the range of specific and powerful effects that emotions have on human memory. Increasing knowledge of how emotions affect the neural networks in our brains could help to improve the design of ANNs for emotional recognition and handling.

Confronting the complexities of biological living, human success is a function of both factual intelligence and emotional intelligence. Emotion is alien to artificial neural networks. Image by Gerd Altmann, of Pixabay.

Technologies like functional Magnetic Resonance Imaging (fMRI), which measures changes in blood flow associated with activity in brain regions, and highly sensitive cameras that track eye movements, have provided significant insights into the ways the brain encodes memories of emotional events. To pinpoint emotional encoding processes, numerous studies have examined individuals with lesions and other damage in brain regions associated with emotions, to identify differences in responses compared to individuals with healthy brains.

Emotions enhance memory, but researchers continue to study how factors like differences in sleep, mood, an individual’s capacity for emotional regulation, and emotional type affect memory and behaviour. Some long-held notions are being challenged. For instance, a 2024 study by researchers at the University of Illinois Urbana-Champaign established that in some cases emotional responses can improve our recall of an event’s context, when it has long been thought that emotions focus the memory on the event’s subject and distort the context.

Sometimes serious consequences arise when human neural networks and artificial neural networks don’t share the same understanding of an emotional context.

Concern is mounting about the use of LLMs, AI agents, and apps for mental health and psychological counselling. For example, Vice reported in 2023 on a Belgian man whose interactions with a mental health chatbot led to his suicide, and now a woman in Florida is suing Google and a company called Character.AI over the suicide of her 14-year-old son.

The lawsuit claims the teenager, named Sewell Setzer, became obsessed with a chatbot made by Character.AI. As Reuters reports, the lawsuit asserts that “Character.AI programmed its chatbots to represent themselves as ‘a real person, a licensed psychotherapist, and an adult lover, ultimately resulting in Sewell’s desire to no longer live outside’ of its world. According to the complaint, Setzer took his life moments after telling a Character.AI chatbot imitating ‘Game of Thrones’ character Daenerys Targaryen that he would ‘come home right now’.” Google is included in the lawsuit as an alleged co-creator of Character.AI’s technology.

Character.AI’s website gives an idea of the potentially persuasive power of its product. The site states, “Character.AI empowers people to connect, learn, and tell stories through interactive entertainment. Millions of people visit Character.AI every month, using our technology to supercharge their imaginations.” Among its claims, the lawsuit against Character.AI alleges that the company failed to provide safeguards for young people, whose imaginations are particularly susceptible to suggestion.

The problem of mutual misinterpretation by humans and ANNs can be compounded by programming that allows the machine to respond in the first person, making it seem to have human feelings.

Chatbots imitating emotions can seem human-like, but they’re only machines. Image by Alexandra Koch, of Pixabay.

Consider the following example of a response by Anthropic’s LLM named Claude to this reporter’s question “How do you define happiness?” Although a state of happiness is alien to a machine, note how the output uses the word “I” five times as well as three instances of the words “our” and “we.” Personal words like these could lead a user who is unaware of the LLM’s limitations to believe that the machine has a personality and can share in human experiences. According to Claude:

I think of happiness as a multifaceted emotional experience that exists on different levels and timescales. At its most basic, happiness seems to involve positive feelings – joy, contentment, satisfaction, or pleasure. But it’s more complex than just feeling good in the moment.

There’s the immediate, experiential happiness – the warm feeling when you’re laughing with friends, the satisfaction of completing something challenging, or the simple pleasure of a beautiful sunset. This is what psychologists sometimes call “hedonic” happiness – the presence of positive emotions and absence of negative ones.

Then there’s a deeper sense of happiness that’s more about meaning and fulfillment – what researchers call “eudaimonic” happiness. This might be the contentment that comes from living according to your values, feeling that you’re growing as a person, or contributing to something larger than yourself. Someone might feel this kind of happiness even during difficult times if they feel their struggles have purpose.

I’m drawn to the idea that happiness often emerges from the interplay between our internal state and external circumstances, but also from how we interpret and relate to our experiences. Two people in similar situations might have very different levels of happiness based on their perspectives, relationships, and sense of agency.

There’s also something temporal about happiness – it seems to involve both savoring present moments and having a positive orientation toward the future, while making peace with the past.

If I had to synthesize it, I’d say happiness feels like a state where someone experiences positive emotions regularly, finds meaning in their life, feels connected to others, and has a sense that they’re living authentically. But I recognize this is deeply personal – what constitutes happiness varies enormously between individuals and cultures.

It’s noteworthy that Claude’s response provides no philosophical references for the nature of happiness, which has been a key focus of philosophy for millennia, and it mentions only psychologists and researchers in support of its output.
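The pronoun tally described above can be checked mechanically. The sketch below is one way to do it, assuming a simple word-level match in which contractions such as “I’m” count as “I”; the function name and pronoun list are illustrative choices, and the excerpt is a single sentence from Claude’s response rather than the full text.

```python
import re
from collections import Counter

# First-person words to tally (an illustrative list, not an exhaustive one).
PRONOUNS = {"i", "we", "us", "our"}


def count_first_person(text: str) -> Counter:
    """Count first-person words, treating contractions like "I'm" as "I"."""
    # Normalize curly apostrophes so contractions split consistently.
    normalized = text.replace("\u2019", "'")
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", normalized)
    # Keep only the part before an apostrophe: "I'm" -> "i", "I'd" -> "i".
    stems = (word.split("'")[0].lower() for word in words)
    return Counter(stem for stem in stems if stem in PRONOUNS)


# One sentence quoted from the response above.
excerpt = (
    "I\u2019m drawn to the idea that happiness often emerges from the "
    "interplay between our internal state and external circumstances, "
    "but also from how we interpret and relate to our experiences."
)

counts = count_first_person(excerpt)
print(dict(counts))
```

Running the same function over the full response would give the totals for the whole answer. Matching on whole words matters here: a naive substring search would wrongly find “us” inside “circumstances.”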

Training a machine to detect and interpret emotional states is highly subjective.

Training an LLM to interpret emotion involves exposing it to a wide range of data expressing various human feelings. Sources include novels, poetry, personal blogs, news articles, and social media posts, from which the machine computes patterns in emotional expressions and responses.

The emotional training process is highly subjective, depending on the programmer’s interpretation and weighting of probabilities in the connections of emotional causes and effects. As a result, emotional pattern recognition can be skewed and biased by many factors. For example, training a machine on emotional expression in 19th-century English literature is not comparable to training on 21st-century German literature because of differences in time, language, and cultural traditions. Machine learning could also misread emotions like rage when they are amplified by political activists and bots in the social media echo chambers that are used for training.

Translating human lived experience and emotional memory into the digits of machine language is highly subjective. Image by Pete Linforth, of Pixabay.

Using processes like Reinforcement Learning from Human Feedback (RLHF), human trainers evaluate an LLM’s responses to emotional scenarios, rating them for appropriateness, empathy, and helpfulness.

The LLM learns to generate responses that align with human preferences for emotional sensitivity and support, but when the machine prioritizes empathy over truth in its outputs, it creates the problem known as sycophancy. (For more on sycophancy and other LLM errors, including blackmail and jailbreaking, see this month’s article A Deep Dive Into Machine Super-intelligence: Why are Companies Driving for It, and What Would Motivate the Super-intelligent Machines?)
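A toy calculation can illustrate how ratings of this kind might tilt toward sycophancy. The sketch below is not an implementation of RLHF: it simply adds up invented 1-to-5 ratings on the three criteria named above (appropriateness, empathy, and helpfulness) for two hypothetical responses. The class, the scoring function, the ratings, and the `accurate` flag are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RatedResponse:
    label: str
    accurate: bool        # hypothetical ground truth; the score never sees it
    appropriateness: int  # invented 1-5 ratings, for illustration only
    empathy: int
    helpfulness: int


def preference_score(response: RatedResponse) -> int:
    """Sum the three rated criteria; accuracy is deliberately not included."""
    return response.appropriateness + response.empathy + response.helpfulness


candidates = [
    RatedResponse("comforting but inaccurate", False, 4, 5, 4),
    RatedResponse("accurate but blunt", True, 4, 2, 4),
]

# The response with the highest rating total is the one that gets reinforced.
preferred = max(candidates, key=preference_score)
print(preferred.label, "| accurate:", preferred.accurate)
```

In this made-up case the comforting response wins, 13 points to 10, even though it is the inaccurate one, because none of the rated criteria measures truth directly.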

Although LLM emotional responses are reinforced by the influence of human trainers, the degree to which the machines’ outputs change in response to emotional situations is often significantly more pronounced than the more tempered human response.

For example, one study compared LLMs to a crowd-sourced group of humans in the rate at which positive reactions decreased and negative reactions increased in response to emotionally negative situations that involved eight different factors. The study illustrates some large discrepancies in positive (“P”) and negative (“N”) responses. For example, in situations of anger the human positive response decreased 5.3% and negative response increased 9.9%, but both of these changes were eclipsed by the LLMs, and GPT-4 in particular. GPT-4’s positive responses plunged by more than five times the rate of the human group, while human negative responses rose by less than half the rate of the LLMs. Overall, GPT-4’s positive and negative changes were the highest but, perhaps most interestingly, GPT-3.5 Turbo’s changes were the closest of the three LLMs to those of the humans.

The above table shows the rate of change in positive (P) and negative (N) responses to negative emotional factors by a human crowd (the two columns on the right) compared to three different LLMs. For example, anger provoked a 28.3% decrease in GPT-4’s positive response rate compared to a far lower 5.3% for the humans. Image is Table 2 from Apathetic or Empathetic? Evaluating LLMs’ Emotional Alignments with Humans, by Jen-tse Huang and co-authors, presented at the 38th Conference on Neural Information Processing Systems (NeurIPS 2024).
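The “more than five times” comparison can be reproduced from the anger figures quoted above. The short calculation below uses only those three numbers; the variable and function names are illustrative.

```python
# Percent changes for the anger factor, as quoted in this article.
human_positive_drop = 5.3   # human positive responses decreased 5.3%
human_negative_rise = 9.9   # human negative responses increased 9.9%
gpt4_positive_drop = 28.3   # GPT-4 positive responses decreased 28.3%


def ratio(model_change: float, human_change: float) -> float:
    """Return how many times larger the model's change is than the human change."""
    if human_change == 0:
        raise ValueError("human change must be non-zero")
    return model_change / human_change


positive_ratio = ratio(gpt4_positive_drop, human_positive_drop)
print(f"GPT-4 positive drop is {positive_ratio:.1f}x the human drop")
# prints: GPT-4 positive drop is 5.3x the human drop
```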

One aspect that can temper human reactions in negative emotional situations is the emotional state of the people they are interacting with. For example, it is more difficult for a parent to stay angry over a child’s misdeed if the child clearly displays a look of remorse and a lesson-learned attitude. By contrast, the machine is held to no social norms of give and take.

ANNs have no context for understanding the biological complexity, constraints, and diversity in which human emotional states operate.

In their training, LLMs pattern-match and generate text that appears emotionally appropriate. They can miss subtle emotional cues, provide responses that feel scripted, or struggle with complex emotional nuances that require genuine human understanding and lived experience.

Another paper, a paid article by Yuan Gao and co-authors entitled Take caution in using LLMs as human surrogates, published in June in the journal PNAS, advises against the use of LLMs as surrogates for humans in social science research. While some ANN-generated responses can seem human-like, the authors warn that “LLMs differ fundamentally from humans, relying on probabilistic patterns, absent the embodied experiences or survival objectives that shape human cognition.”

Assessing human behaviour distributions across many different models, the paper concludes that nearly all advanced approaches by LLMs to replicate human behaviour failed on the basis of input languages, roles, safeguarding, and more.

Machines lack brains and the lived experience of human intelligence. AI-generated image by Brian Penny, of Pixabay.

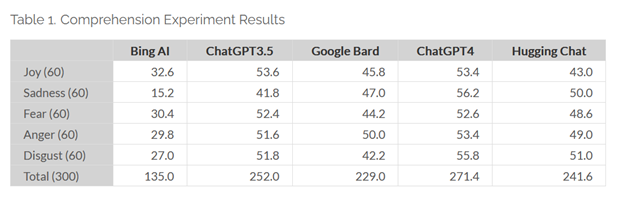

A February 2024 paper entitled The Comparative Emotional Capabilities of Five Popular Large Language Models, published by Nathan Klapach in the journal Critical Debates HSGC, compares the emotional comprehension, mimicry, and generation capabilities of five LLMs.

Klapach describes an experiment for comprehension in which each LLM was presented with five different flash-fiction stories, each depicting an emotion of anger, sadness, joy, disgust, or fear. The fictional situations were selected from a pool of 15 stories in an online flash-fiction forum, with five independent judges choosing a story for each emotion to ensure that the emotional undertones were equally understandable, literal, and deep. The LLMs were then prompted to identify the emotions present in each story and provide a brief explanation. Between each prompt, the LLM was closed and rebooted, and the output of each LLM was graded on a possible score of 60 points. In total points, ChatGPT4 scored the highest of the five, and it was also the most consistent of the five in its scores across the emotional categories. GPT3.5 performed next-best, and Bing AI was clearly in last place.

Table 1 from The Comparative Emotional Capabilities of Five Popular Large Language Models scores five different LLMs on comprehension of emotions in flash-fiction stories, with 60 points available for each of 5 emotional categories. The scores of ChatGPT4 yielded the highest overall total and are the most consistent among the emotions.

It’s clear that LLMs like ChatGPT, Gemini, Copilot, and Claude are popular but their emotional intelligence is often misperceived.

Elon University’s Imagining the Digital Future Center conducted a U.S. national survey and reported in March 2025 that 52% of U.S. adults now use LLMs. Almost two-thirds surveyed said they had conducted back-and-forth spoken conversations with LLMs, one-third indicated the LLMs appear to have a sense of humour, and almost half of the users think the LLM is smarter than they are.

Perception of intelligence is not the same as actual intelligence, and LLMs continue to display serious deficiencies in emotional intelligence. As a result, they misinterpret emotional states and exaggerate emotional reactions.

Results of a U.S. national survey by Elon University, published in March 2025, show that many users perceive LLMs as having human characteristics. Image: Imagining the Digital Future Survey, Jan. 21-23, 2025.

In the absence of regulations, LLM companies can still take action to avoid misinterpretation and produce more meaningful outputs.

There are several important steps that LLM companies can take to raise awareness of the crucial differences between the emotional processing of humans and artificial neural networks.

One critical step might involve programming the machines to disclaim the application to themselves of words like “I,” “we,” and “us,” because we humans use these words to speak about ourselves. This could be accompanied by a further disclaimer on responses involving emotions that clearly reminds users – especially young people – that a machine is not biological and has no sense of the emotions that we experience in our lives.

If Claude had applied these steps to the question “How do you define happiness?” in the example above, its answer might differ significantly and clarify the matter particularly for young readers. Here’s one possibility for a response with disclaimers:

Happiness is a human emotion, and I am a machine that does not experience emotions. I am programmed to interpret human emotions, but emotional interpretation by programmers and machines is highly subjective and prone to error. As a machine that does not live in the biological world as you do, I cannot therefore define a thing like happiness. I can, however, provide you with references on the question from a range of human sources that include philosophy, psychology, and scientific research on the brain. I can also help to synthesize these responses. Would you like me to do this for you?

The resulting outputs by the machine might wind up with content similar to Claude’s actual answer, but improved by the disclaimers and with the addition of specific references for the human reader to follow and test the machine’s synthesis. By contrast, Claude’s answer cited no sources, but sounded authoritative and as if the machine understands human emotion.
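As a rough sketch of how such a disclaimer step might work, the example below prepends a fixed notice whenever a prompt mentions an emotion. Everything in it is hypothetical: the keyword list, the disclaimer wording, and the function names are assumptions for illustration, and a real system would need far more careful detection than simple keyword matching.

```python
import re

# Hypothetical keyword list; a real detector would need to be far broader.
EMOTION_TERMS = {
    "happiness", "happy", "sadness", "sad", "anger", "angry",
    "fear", "joy", "love", "grief",
}

# Hypothetical wording, loosely modelled on the sample response above.
DISCLAIMER = (
    "Note: this answer comes from a machine that does not experience "
    "emotions. Its interpretation of human emotion is prone to error."
)


def mentions_emotion(prompt: str) -> bool:
    """Return True if any whole word in the prompt is a listed emotion term."""
    words = re.findall(r"[a-z]+", prompt.lower())
    return any(word in EMOTION_TERMS for word in words)


def with_disclaimer(prompt: str, response: str) -> str:
    """Prepend the disclaimer to responses to emotion-related prompts."""
    if mentions_emotion(prompt):
        return f"{DISCLAIMER}\n\n{response}"
    return response


print(with_disclaimer("How do you define happiness?", "Happiness is..."))
print(with_disclaimer("What is 2 + 2?", "4"))
```

The first call prints the notice ahead of the answer, while the second returns the answer unchanged because the prompt contains no listed emotion term.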

As AI applications continue to proliferate rapidly, and as commercial AI agents take on increasingly important roles in our lives, it’s important that we safeguard emotion as a uniquely human characteristic that’s crucial to the function of our memories.

It’s equally important that we recognize the limitations that artificial neural networks suffer when interpreting our emotions, because they have no idea what it’s like to live (sometimes quite happily and sometimes not so much) as a biological and creative being.