Deepfake images of Pope Francis circulate on the internet. It is often impossible to determine the source of such manufactured images.

By Mariana Meneses and James Myers

This article is part of The Quantum Record’s ongoing series on Quantum Ethics, with six issues introduced in our May 2024 feature Quantum Ethics: There’s No Time Like the Present to Plan for the Human Future With Quantum Technology.

Technology to create fake images and voice recordings is becoming more powerful and pervasive, bringing with it risks of blackmail, extortion, and emotional manipulation of viewers and listeners as well as the impersonated victims.

Quantum technology has the potential not only to add to the risks but also to decrease the possible damage by aiding in the detection of fakes.

The New York Times recently reported the ordeal of Sabrina Javellana, who at the age of 24 was a rising star in local politics when her images were stolen in 2021. The criminals who stole Javellana’s images manipulated them to create deepfake porn in an attempt to discredit her actions as deputy mayor of a Florida city.

Sabrina Javellana, from her profile on X.

“I felt like I didn’t have a choice in what happened to me or what happened to my body. I didn’t have any control over the one thing I’m in every day,” Javellana stated after the shock of discovering the attempt to discredit her with deepfake porn. Tools to create deepfake audio and images are often free and easily found on the web.

Deepfake technology uses artificial intelligence to create realistic but fake videos or photos by altering sequences of pixels in original images, and fake audio by inserting into recordings words that were never spoken.

Deepfakes are often created with an intention to harm, for example to bring shame on individuals out of spite or revenge by placing them in fake pornography or to create an advantage by spreading false information about political opponents.

Can you spot the deepfake? How AI is threatening elections | CBC News: The National

“What if, during the next election, you see multiple videos that look and sound like the journalist you trust, telling you that the date of the election has changed. Would you believe it?” asks reporter Catharine Tunney in a CBC news broadcast.

“It doesn’t matter if you correct the records 12 hours later. The damage has been done,” says University of California, Berkeley professor Dr. Hany Farid, who specializes in digital forensics.

How does the technology behind deepfakes work?

As explained by Andersen Cheng, CEO of cybersecurity company Post-Quantum, machine learning algorithms are used to create an ‘autoencoder’ that “studies video clips to understand what a person looks like from multiple angles and the surrounding environment, and then it maps that person onto the individual by finding common features.”

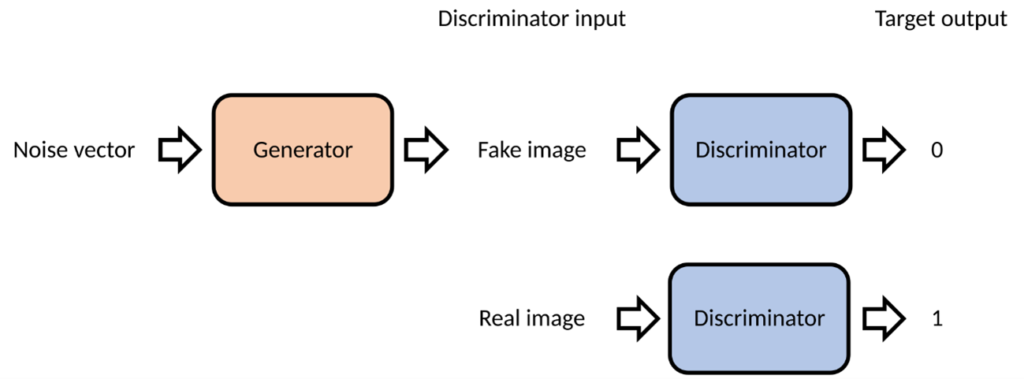

Drawing a large pool of data from many video clips, the autoencoder swaps in manufactured data to replace the original data. The fake data is then run through another type of machine learning algorithm called a Generative Adversarial Network (GAN), which detects and corrects flaws in the manufactured images. The GAN uses a ‘discriminator’ to test the realism of the generated materials in an iterative process that continues to run until the discriminator cannot distinguish the fake from the real images.
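The adversarial loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the pipeline Cheng describes: the "images" are single numbers, the generator has one hypothetical parameter `mu`, and the discriminator is a simple logistic function with hypothetical parameters `w` and `b`. The function name `train_toy_gan` and all settings are invented for this example.

```python
import math
import random


def sigmoid(t):
    # Numerically safe logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=64, lr=0.05, real_mean=4.0, seed=0):
    """Alternate discriminator and generator updates on 1-D data."""
    rng = random.Random(seed)
    mu = 0.0         # generator: fake sample = mu + noise (assumption)
    w, b = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + b) (assumption)
    history = []
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [mu + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)).
        grad_w = grad_b = 0.0
        for x in real:
            d = sigmoid(w * x + b)
            grad_w += (1.0 - d) * x
            grad_b += (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + b)
            grad_w -= d * x
            grad_b -= d
        w += lr * grad_w / batch
        b += lr * grad_b / batch

        # Generator step: gradient ascent on log D(fake), i.e. nudge mu
        # so the discriminator rates the fakes as more realistic.
        grad_mu = sum((1.0 - sigmoid(w * x + b)) * w for x in fake) / batch
        mu += lr * grad_mu
        history.append(mu)
    return mu, (w, b), history


if __name__ == "__main__":
    final_mu, (w, b), _ = train_toy_gan()
    print("generator mean:", final_mu, "discriminator:", (w, b))
```

Small adversarial setups like this one can oscillate rather than settle, so the printed values are best read as a snapshot of the tug-of-war between the two parts, not as a converged result.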

Sora is a powerful new technology approaching realism.

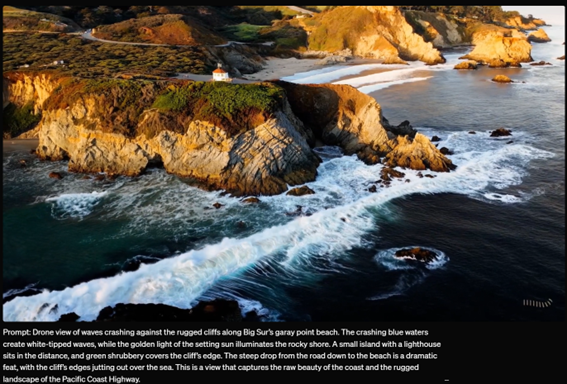

Sora is a text-to-video model from ChatGPT-maker OpenAI that transforms word prompts into video sequences. At its current state of development, Sora can generate up to one minute of high-quality visual content. Operating as a diffusion model, Sora begins with a static noise video and refines it through multiple steps to create coherent and visually appealing scenes. With applications in filmmaking, design, and creative content, Sora has the potential to influence how we create and experience visual media.
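The idea of starting from noise and refining it step by step can be sketched with a one-dimensional toy sampler. OpenAI has not published Sora's implementation, so everything here is an assumption for illustration: the "video" is a single number, the noise schedule is a commonly used linear one, and the trained neural network that a real diffusion model relies on is replaced by a closed-form estimate (`ideal_noise_estimate`) that is only available because the clean data is assumed to be Gaussian. All function names are hypothetical.

```python
import math
import random


def make_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    # Linear noise schedule (an assumption; other schedules exist).
    betas = [beta_start + (beta_end - beta_start) * t / (steps - 1)
             for t in range(steps)]
    alphas = [1.0 - beta for beta in betas]
    alpha_bars, running = [], 1.0
    for alpha in alphas:
        running *= alpha
        alpha_bars.append(running)
    return betas, alphas, alpha_bars


def ideal_noise_estimate(x_t, alpha_bar, data_mean, data_std):
    # Stand-in for a trained denoising network: the exact expected noise
    # given x_t when the clean data is Gaussian(data_mean, data_std).
    var_t = alpha_bar * data_std ** 2 + (1.0 - alpha_bar)
    centered = x_t - math.sqrt(alpha_bar) * data_mean
    return math.sqrt(1.0 - alpha_bar) * centered / var_t


def sample(data_mean=3.0, data_std=0.5, steps=1000, rng=None):
    """Start from pure noise and denoise it over many small steps."""
    rng = rng or random.Random()
    betas, alphas, alpha_bars = make_schedule(steps)
    x = rng.gauss(0.0, 1.0)  # pure noise
    for t in reversed(range(steps)):
        eps_hat = ideal_noise_estimate(x, alpha_bars[t], data_mean, data_std)
        # Remove the estimated noise for this step.
        x = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat)
        x /= math.sqrt(alphas[t])
        # Re-inject a little fresh noise on every step except the last.
        if t > 0:
            x += math.sqrt(betas[t]) * rng.gauss(0.0, 1.0)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    print([round(sample(rng=rng), 2) for _ in range(5)])
```

Each output starts as random noise and ends up resembling a draw from the assumed "clean" distribution. A text-to-video model would apply the same kind of loop to millions of pixel values at once, guided by the prompt.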

It could also potentially be used to create videos intended to deceive people, which OpenAI addresses on Sora’s homepage without providing specifics. The page’s section on safety states, “We are working with red teamers — domain experts in areas like misinformation, hateful content, and bias — who will be adversarially testing the model,” and that when Sora is available as a product, “our text classifier will check and reject text input prompts that are in violation of our usage policies, like those that request extreme violence, sexual content, hateful imagery, celebrity likeness, or the IP of others.” There is no indication of the process followed by the company to establish and update its usage policies.

The safety message concludes, “Despite extensive research and testing, we cannot predict all of the beneficial ways people will use our technology, nor all the ways people will abuse it.”

Here are three examples of realism from Sora’s webpage, where the moving images can be viewed in much higher resolution:

Manufactured images contain weaknesses.

On Sora’s webpage, OpenAI cites examples of errors the AI model can make. These weaknesses include inaccurate physical modeling, unnatural object “morphing,” difficulty simulating complex interactions between objects and multiple characters, and the spontaneous appearance of animals or people, especially in scenes containing many elements.

Here’s an example of how Sora sometimes creates physically implausible motion:

Quantum holds potential for holography and use in generative AI.

According to Rod Trent, blogger and Senior Program Manager for Microsoft, quantum computing offers significant potential to advance generative AI models with Quantum Generative Adversarial Networks (QGANs).

Holography requires a large amount of data to operate over time in three spatial dimensions. As Trent writes, “Quantum computers can generate high-dimensional and high-fidelity data from a low-dimensional latent space, using quantum algorithms such as quantum amplitude estimation, quantum generative adversarial networks (QGANs), and quantum variational circuits.”

Quantum Generative Adversarial Networks are an emerging technology that combines quantum computing with the principles of GANs as described above. GANs are used in generative AI and are a powerful tool in classical machine learning for generating complex and high-dimensional distributions. QGANs offer the potential for accelerated training and enhanced generative models due to quantum parallelism, which is a fundamental property of quantum computers that allows them to perform multiple computations simultaneously by exploiting the quantum mechanical properties of superposition and entanglement.
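Superposition and entanglement can be illustrated with a small classical simulation of two qubits. This is only a sketch for intuition and says nothing about how a QGAN is built: the helper names (`apply_hadamard`, `apply_cnot`, `bell_state`) are hypothetical, and the program simply tracks the four amplitudes of a two-qubit state on an ordinary computer.

```python
import math


def apply_hadamard(state, qubit):
    # Put one qubit into an equal superposition of 0 and 1.
    h = 1.0 / math.sqrt(2.0)
    bit = 1 << qubit
    new = [0.0] * len(state)
    for i, amp in enumerate(state):
        if i & bit == 0:
            new[i] += h * amp
            new[i | bit] += h * amp
        else:
            new[i & ~bit] += h * amp
            new[i] -= h * amp
    return new


def apply_cnot(state, control, target):
    # Flip the target qubit wherever the control qubit is 1.
    c_bit, t_bit = 1 << control, 1 << target
    new = [0.0] * len(state)
    for i, amp in enumerate(state):
        j = i ^ t_bit if i & c_bit else i
        new[j] = amp
    return new


def bell_state():
    state = [1.0, 0.0, 0.0, 0.0]            # both qubits start at 0
    state = apply_hadamard(state, qubit=0)  # superposition
    state = apply_cnot(state, control=0, target=1)  # entanglement
    return state


if __name__ == "__main__":
    for index, amp in enumerate(bell_state()):
        print(format(index, "02b"), "probability", round(amp * amp, 3))
```

After the two operations, the only possible measurement outcomes are `00` and `11`, each with probability one half: the qubits are in superposition and their results are linked. Simulating n qubits this way takes 2^n amplitudes, which is why classical simulation quickly becomes impractical as qubit counts grow.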

Illustration of the operation of a GAN. Image: Mtanti on Wikipedia

QGANs could generate quantum states, molecular structures, or other quantum data, which may be particularly useful in fields like drug discovery where they could create molecular structures with desired properties for experimental development.

QGANs are a promising but early-stage technology with ongoing research focused on overcoming technical hurdles including quantum hardware scalability, integration with classical systems, and the interpretability of quantum models.

Trent highlights barriers such as high costs, ethical concerns, and security risks that hinder the widespread adoption of quantum computing. While widely available quantum computing could revolutionize generative AI and other fields, its risks include potential misuse and the emergence of new threats like quantum deepfakes.

Researchers from the University of Ottawa, the National Research Council of Canada, and Imperial College London have developed a quantum-inspired technique for creating more accurate and resilient holograms. Led by Dr. Benjamin Sussman, the team devised a method to record and reconstruct faint light beams, including single light particles (called photons), allowing for the three-dimensional imaging of remote, well-lit objects.

This technique offers significant advantages over traditional holography, such as resistance to mechanical instabilities and the ability to capture holograms over extended durations. The breakthrough, which leverages quantum imaging advancements and cutting-edge camera technology, could revolutionize 3D scene reconstruction with potential applications in fields like astronomy, nanotechnology, and quantum computing. It could also elevate the deepfake risks.

Hologram Zoo – Africa Experience Ad | Axiom Holographics

“Everyone is expecting the hologram revolution,” Bruce Dell, founder of Australia’s Hologram Zoo, told the BBC.

Advancements in this area could make holograms more accessible and cost-effective, potentially transforming industries from entertainment to medicine and beyond.

The multiplying risks of deepfakes are difficult to contain.

Deepfake technology presents significant ethical challenges alongside its innovative potential. While it has legitimate uses in entertainment and communication, it also poses risks like misinformation, identity theft, and privacy violations. Deepfakes can manipulate public opinion, influence elections, and escalate international tensions.

The technology’s rapid evolution outpaces legal and regulatory frameworks, raising issues around consent, intellectual property, and defamation. Addressing these challenges requires new legal measures, ethical guidelines, and international cooperation to ensure responsible use and protect the integrity of information.

With the rise of AI tools like OpenAI’s ChatGPT, first released in late 2022, and the use of open-source and other applications freely available to anyone with the interest and skill to use them, creating convincing deepfakes is becoming much easier.

Fake images and audio are no longer targeting only celebrities and politicians, but also businesses and everyday individuals. Social media platforms and governments are trying to combat this threat with new rules and technologies, but they have not been able to address the underlying problem and so the deepfakes continue to proliferate.

Banning deepfakes is difficult because the technology to create them is widely available and can’t be easily controlled.

The MIT Technology Review argues that while efforts like the US Federal Trade Commission’s rules against deepfakes and commitments from tech companies are helpful, they can’t solve everything. It states that while big tech companies try to block harmful deepfakes, many are generated from open-source systems or shared on encrypted platforms, which makes them hard to trace. It concludes that effective regulation needs to address every part of the deepfake creation and distribution process, including smaller platforms like Hugging Face and GitHub, which provide access to AI models.

Adding watermarks and labels indicating that content is AI-generated can help to identify deepfakes, but they aren’t foolproof because bad actors can remove these markers. Ensuring all AI-generated content is watermarked is also challenging and might even make unmarked, harmful content seem more credible.
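A minimal sketch shows why a naive watermark is fragile. It assumes the simplest possible scheme, hiding marker bits in the least significant bit of each pixel value, which is an illustrative assumption and not how production provenance or watermarking systems necessarily work. The function names and pixel values are hypothetical.

```python
def embed_watermark(pixels, bits):
    # Overwrite the least significant bit of the first len(bits) pixels.
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit
    return marked


def read_watermark(pixels, length):
    # Recover the hidden bits from the lowest bit of each pixel.
    return [p & 1 for p in pixels[:length]]


def strip_low_bits(pixels):
    # A trivial edit that zeroes every lowest bit: changing each pixel
    # by at most 1 is enough to erase this kind of mark.
    return [p & ~1 for p in pixels]


if __name__ == "__main__":
    image = [200, 13, 77, 54, 91, 120, 33, 8]  # toy grayscale pixels
    mark = [1, 0, 1, 1]                        # toy "AI-generated" tag
    marked = embed_watermark(image, mark)
    print("marked image: ", marked)
    print("tag read back:", read_watermark(marked, len(mark)))
    print("after strip:  ", read_watermark(strip_low_bits(marked), len(mark)))
```

The marked image differs from the original by at most one brightness level per pixel, so the tag is invisible to a viewer, yet an equally invisible edit removes it. More robust schemes exist, but the example shows why labels alone cannot be relied on.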

Before the European elections in June 2024, the EU asked major social media platforms like Facebook and TikTok to take action against AI-generated deepfakes.

The European Commission issued guidelines for platforms to label AI-created content clearly and reduce the spread of misleading or harmful information. The guidelines aimed to prevent manipulation and misinformation, particularly from sources such as disinformation campaigns reportedly sponsored by the Russian state. Social media and other public platforms were also urged to highlight official election information and improve measures to protect the integrity of the electoral process.

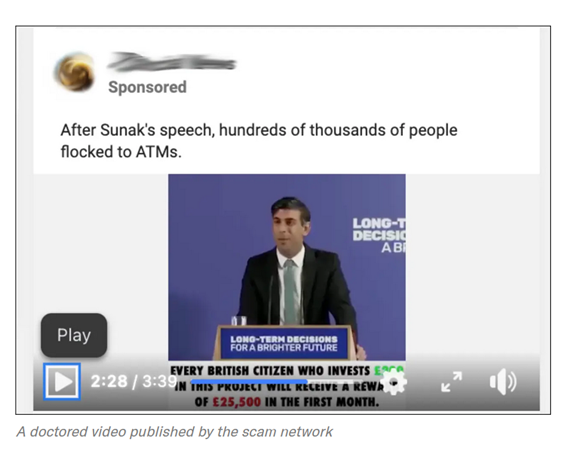

The Bureau of Investigative Journalism published this example of a deepfake video that resulted in financial loss to those who fell for the scam.

The Bureau of Investigative Journalism has uncovered a sophisticated deepfake scam network that exploited doctored videos of prominent figures such as current and former U.K. Prime Ministers Keir Starmer and Rishi Sunak to promote a fraudulent investment scheme called Quantum AI.

Between 2022 and early 2024, these manipulated videos circulated on Facebook, Instagram, TikTok, YouTube, and X, misleading viewers into investing money. Despite efforts by Meta, Google, and TikTok to remove the content and enforce policies, the scam reached millions globally, causing significant financial losses. The campaign’s scale and persistence raise serious concerns about the effectiveness of tech platforms in combating misinformation, especially during critical election periods.

According to the World Economic Forum, disinformation is a major global risk in 2024, with deepfakes being a particularly concerning use of AI in increasingly immersive online environments.

The WEF argues that a multi-layered approach is needed: using technology to detect fake content, creating policies to regulate AI, raising public awareness, and adopting a zero-trust mindset of scepticism and verification. AI-based detection tools can help to find inconsistencies in media, while policies like the EU’s new AI Act and U.S. President Biden’s Executive Order on AI aim to ensure accountability. Enhanced public education will help people to recognize and question fake content.

Defending Authenticity in a Digital World | DARPAtv

The Semantic Forensics (SemaFor) program of DARPA, the U.S. Government’s Defense Advanced Research Projects Agency, has developed numerous tools now ready for commercialization that will detect and characterize deepfakes.

To support this transition, DARPA is launching two initiatives: an analytic catalog of open-source resources, and the AI Forensics Open Research Challenge Evaluation (AI FORCE). The catalog will provide researchers and industry with tools developed under SemaFor, while AI FORCE will encourage the creation of machine learning models to detect synthetic AI-generated images.

DARPA aims to involve the commercial sector, media organizations, researchers, and policymakers in combating the threat of manipulated media by leveraging these investments.

While quantum technology could be used to create deepfakes, it can also significantly advance the battle against deception in several ways.

Quantum computers could enhance the detection of fake videos and audio with algorithms such as quantum neural networks (QNNs) that “have a great potential for tackling the issue of the classification of bona fide or synthetic images, audio, and videos.” Additionally, quantum applications in cybersecurity, such as quantum-resistant cryptography, could strengthen defenses against attacks involving deepfakes. Furthermore, quantum research might improve our understanding of deepfake creation by revealing insights into the machine learning principles used in generating these deceptive media.

While quantum technology does not address deepfakes at their source, its potential contributions lie in enhancing detection, defense, and comprehension of this issue.

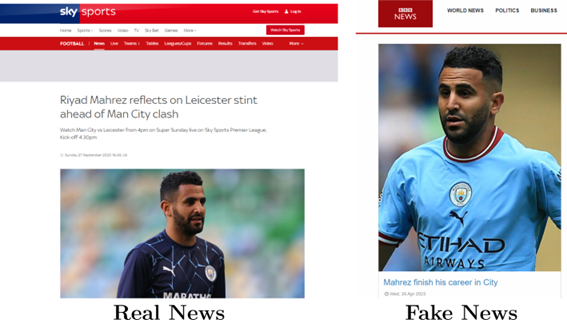

Comparison of real and fake news from the February 2024 study Deepfakes: Current and Future Trends.

In a recently published article in the journal Artificial Intelligence Review, Dr. Ángel Fernández Gambín and co-authors offer a detailed examination of deepfakes, covering their technology, consequences, and future research directions.

They define deepfakes as synthetic media created using deep learning techniques that can produce realistic images, videos, and audio. They highlight the legal and ethical issues, particularly regarding data privacy under the General Data Protection Regulation (GDPR), and stress the need for better data anonymization and privacy safeguards.

GDPR is a major data protection law implemented by the European Union in May 2018 that governs the collection, processing, storage, and sharing of personal data of EU citizens and residents. It grants individuals rights to access, correct, and request the deletion of their data, requires organizations to obtain clear consent before processing personal data, and mandates prompt notification of data breaches to both authorities and affected individuals. GDPR also imposes substantial fines for non-compliance (GDPR – EU).

The authors point out that detecting deepfakes is challenging due to rapid advancements in creation techniques, and they call for improved authentication methods to verify the origin of content. The paper notes the significant role of social media in spreading deepfakes, especially among users with specific ideological biases, and suggests future research should focus on user behavior and enhanced detection methods. They also explore the potential of technologies like blockchain for improving cybersecurity and combating digital deception.

Deception and impersonation are practices that have persisted through millennia of human history, and are now amplified by technology with the creation of deepfake video and audio.

As long as the financial and emotional incentives continue to reward the deceivers, the practices will continue. It remains to be seen whether emerging quantum technologies will help or hinder – or both – the goal of reducing the crime and protecting the public.
