A modern reworking of Michelangelo’s The Creation of Adam, with the combination of human and AI agency. Image: Freepik

By Mariana Meneses

Imagine waking up to find that your AI assistant has not only organized your schedule, but also negotiated the price of your next vacation, drafted your work presentation, and identified an unusual pattern in your monthly expenses. Welcome to the age of AI agents – the next generation of artificial intelligence that’s bound to redefine our relationship with technology.

As AI becomes more integrated into daily life, its ability to analyze human behavior and personalize interactions raises ethical concerns. These systems can do more than assist—they can subtly influence decisions, shaping opinions, purchases, and beliefs. This growing potential for manipulation underscores the need for safeguards.

OpenAI CEO Sam Altman on the Two Outcomes for AI Agents | All-In Podcast Clips

(For the entire episode go to https://www.youtube.com/watch?v=nSM0xd8xHUM)

The Rise of “Autonomous AI Agents”

Scientific American reports on how artificial intelligence is evolving beyond traditional chatbots into more autonomous AI “agents” – systems capable of making decisions and performing tasks with minimal human oversight. Unlike chatbots, which require constant user input, these agents can interact with external applications, adapt to changing circumstances, and execute complex operations on behalf of individuals or organizations. Major tech companies are racing to develop these advanced systems, with OpenAI preparing to release an agent code-named “Operator” and DeepMind showcasing “Project Astra,” a prototype designed to process visual and contextual information for everyday tasks.

However, the path to truly autonomous AI agents faces significant challenges. AI journalist Alex McFarland notes that while many current “agents” are merely sophisticated chains of prompts, the next generation will need to master multi-step reasoning and adapt to unexpected scenarios across different systems. The current accuracy rate of about 80% falls short of the 99% reliability required for critical business applications. To bridge this gap, tech companies are prioritizing infrastructure improvements focused on dependability over raw capability.

What are AI Agents? | IBM Technology

In this IBM Technology video, Maya Murad explains how AI agents represent a shift from monolithic models to compound AI systems.

While traditional models are limited by their training data, AI agents can integrate with databases and external tools to become more powerful. By putting large language models in charge of the control logic, these agents can plan and execute solutions independently. Murad highlights how agentic approaches excel at complex, varied tasks while programmatic systems are better suited for narrow, well-defined problems.
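The idea of putting a model in charge of the control logic can be pictured as a simple loop: the model picks the next action, a tool runs it, and the result feeds the next decision. The sketch below is a minimal illustration and not the design shown in the video. The names `fake_model`, `TOOLS`, `lookup_vacation_days`, and `add` are hypothetical, and `fake_model` is a hard-coded stand-in for where a real large language model would be called.

```python
from typing import Callable

# Hypothetical tools; a real agent would wrap databases or external APIs here.
def lookup_vacation_days(employee: str) -> int:
    records = {"alice": 12, "bob": 5}  # toy in-memory "database"
    return records.get(employee, 0)

def add(a: int, b: int) -> int:
    return a + b

TOOLS: dict[str, Callable] = {
    "lookup_vacation_days": lookup_vacation_days,
    "add": add,
}

def fake_model(goal: str, history: list) -> dict:
    """Stand-in for an LLM: chooses the next action from the results so far."""
    if len(history) == 0:
        return {"action": "lookup_vacation_days", "args": ["alice"]}
    if len(history) == 1:
        return {"action": "add", "args": [history[0], 3]}
    return {"action": "finish", "answer": history[-1]}

def run_agent(goal: str, max_steps: int = 5):
    """Control loop: the model decides, the tools act, the results are fed back."""
    history = []
    for _ in range(max_steps):
        decision = fake_model(goal, history)
        if decision["action"] == "finish":
            return decision["answer"]
        tool = TOOLS[decision["action"]]
        history.append(tool(*decision["args"]))
    raise RuntimeError("agent did not finish within max_steps")

result = run_agent("How many vacation days will Alice have after earning 3 more?")
print(result)
```

In this toy run the agent looks up a value, combines it with new information, and then decides it is done. A programmatic system would hard-code that sequence, whereas here the sequence is chosen at each step by the model.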

From Chatbots to Agents: The Evolution of Consumer AI

Today, chatbots are widely available—even for free—offering a range of functionalities for everyday tasks and interactions. The image is a screen capture from Anthropic’s AI assistant named Claude.

While AI agents represent the cutting edge of artificial intelligence, most consumers today interact with simpler AI tools in their daily lives.

Understanding current AI adoption patterns can help us to see how the transition to more autonomous agents might unfold. Recent surveys reveal a fascinating picture of how people are already incorporating AI into their work and personal lives, setting the stage for the next wave of AI agents.

The report AI Statistics 2024 by AIPRM, a business aiming to help its customers improve the quality of generative AI, is a comprehensive collection of AI consumer trends with a primary focus on the United States. Below we provide selected statistics; the entire report is available from AIPRM.

When it comes to using AI in our daily lives, there’s a clear divide between how people use it at home versus at work. Take virtual assistants like Alexa and Siri: while about 6 in 10 people use them in their personal lives, fewer than 3 in 10 use them at work. The same pattern shows up with other AI tools: half of people use AI-powered fitness trackers in their personal time, but only about a third use them at work. For music recommendations, the difference is smaller: about half of people use them personally, compared to just under 40% at work.

According to the AIPRM report, Forbes Magazine found that people are most interested in using AI for two main tasks: helping them write texts and emails (45% of people) and getting answers to their financial questions (43%). Around 38% plan to use AI for planning travel itineraries, while 19% plan to use AI for summarizing complex or lengthy documents.

In terms of public perception, Americans see advantages in AI adoption, with productivity improvement leading the way at 43%. Close behind, about 4 in 10 people believe AI will enhance decision-making, while a third expect it to speed up customer service and reduce errors. Other anticipated benefits include streamlined work processes and better customer relationships, each cited by roughly 30% of respondents. While about a quarter of people see AI as a cost-saving tool, and 19% expect it to boost sales, it’s notable that only a small fraction – about 4% – see no benefits at all in AI technology.

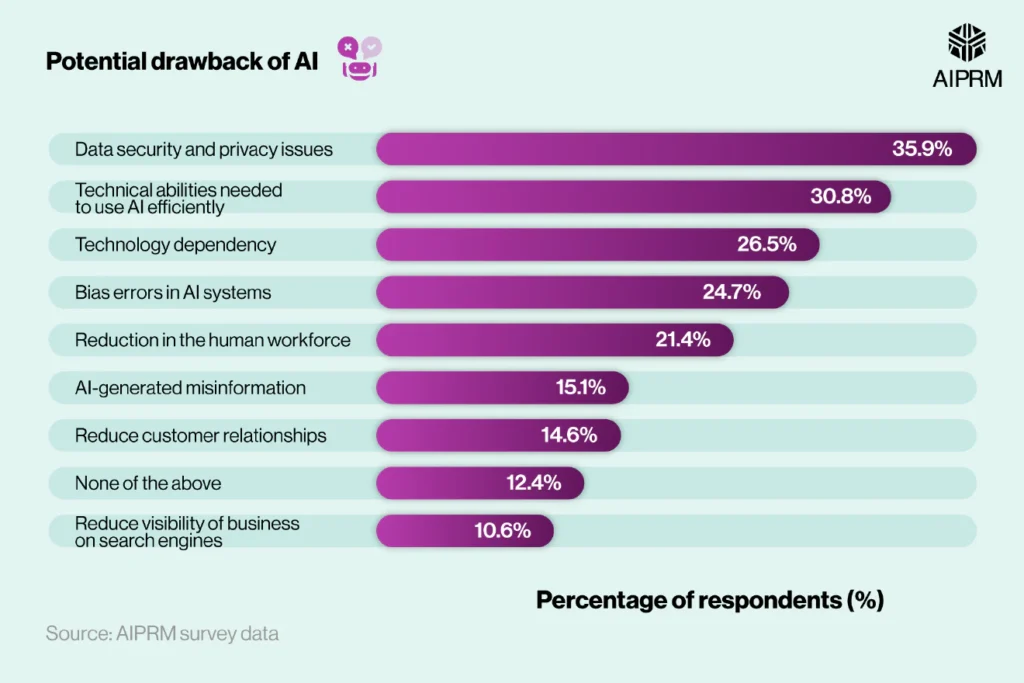

The main drawbacks of AI perceived by the American public are data security and privacy issues and the technical abilities needed to use AI efficiently. The accompanying image is from the AIPRM report, using its own survey data.

AI Is Reshaping Work and Daily Life

Healthcare:

According to the Forbes article “Where Healthcare AI Ambient Scribes Are Heading,” in 2024 AI-powered ambient scribes emerged as a transformative force in healthcare, capturing and processing clinician-patient interactions to streamline medical documentation. These systems leverage speech recognition to transcribe conversations, apply large language models for analysis, and generate structured clinical notes for review. By automating these labor-intensive tasks, they ease administrative burdens, reduce clinician burnout, and enhance workflow efficiency.

However, their role is rapidly expanding beyond documentation. Many now assist with clinical summarization (pre-charting), order entry, diagnostic coding, and patient communication. Some generate multilingual after-visit summaries, while others convert unstructured conversations into structured data for electronic health records (EHRs). AI is also making strides in clinical decision support, assisting doctors with evidence-based recommendations, identifying care gaps, and even suggesting differential diagnoses.

Forbes notes that with over 50 products on the market, AI scribes vary widely in accuracy, usability, and specialization. Some models are fine-tuned for specific fields like orthopedics and cardiology, while others are trained on medical conversations to improve reliability. Meanwhile, major EHR vendors—including Epic, Meditech, and Athenahealth—are forming exclusive partnerships with AI companies, determining which solutions gain deep integration into healthcare systems. While some players may consolidate, the technology itself is likely to become an integral part of EHRs, evolving from standalone tools into clinical assistants.

The AIPRM report, using data from the Pew Research Center, shows that 75% of Americans believe AI technology is advancing too quickly before its risks to patients are fully understood, while only about 1 in 4 adults think it is progressing too slowly and missing opportunities to enhance patient care. However, using data from Statista, the report also highlights the accelerating investment in AI within the healthcare sector, projecting its market value to grow roughly ninefold between 2023 and 2030, reaching $187.95 billion, an 810% increase. This surge underscores the industry’s confidence in AI’s potential, despite public concerns about its rapid adoption.
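The two growth figures quoted for the healthcare AI market can be cross-checked with quick arithmetic. The sketch below uses only the numbers given above. The 2023 baseline it prints is derived from those numbers and is an assumption, not a figure quoted from the report.

```python
# Figures quoted in the text (Statista projection cited by the AIPRM report).
value_2030_bn = 187.95   # projected market value in 2030, in billions of USD
pct_increase = 810       # stated percentage increase from 2023 to 2030

# A p% increase multiplies the starting value by (1 + p/100).
growth_multiple = 1 + pct_increase / 100

# Derived, not quoted: the 2023 baseline implied by the two figures above.
implied_2023_bn = value_2030_bn / growth_multiple

print(f"growth multiple: {growth_multiple:.1f}x")
print(f"implied 2023 value: ${implied_2023_bn:.2f} billion")
```

An 810% increase corresponds to a final value about 9.1 times the starting value, which implies a 2023 market of a little over $20 billion.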

Free AI applications, accessible to everyday users, go beyond traditional chatbots, with tools like the Consensus app that can summarize scientific findings and support research.

Education:

According to Casey Newton, founder and editor of the publication Platformer, one of the most promising applications for AI agents is in education.

He reports on how Wharton professor Ethan Mollick tested Anthropic’s AI agent Claude 3.5 Sonnet by asking it to create a high school lesson plan on the novel The Great Gatsby. Unlike traditional chatbots that require step-by-step user input, the agent independently gathered resources, structured assignments, and aligned them with educational standards—all while Mollick focused on other tasks. However, as Newton highlights, these agents still struggle with reliability, making human oversight essential. As AI continues to improve, its role in streamlining lesson planning, grading, and curriculum development could significantly reshape the educational landscape.

The AIPRM report, using data from EdWeek, shows that U.S. educators do not feel prepared to use AI at work. The survey found that 42% are familiar with AI but have never used it, while 21% have only heard of it without further knowledge. Notably, none identified as AI experts in either teaching or workplace applications, though just 2% were entirely unfamiliar with the technology.

Social Media:

According to Jason Koebler, cofounder of 404 Media, Meta’s automatic integration of AI chatbots into Facebook groups has raised serious concerns about reliability and user safety, particularly in higher-risk activities like mushroom foraging.

A chatbot called FungiFriend was added without user consent to a group dedicated to identifying wild mushrooms, where the AI dangerously advised a member to sauté a toxic species known to accumulate arsenic. Experts, including consumer safety advocate Rick Claypool, warn that AI tools are not yet capable of reliably distinguishing edible from poisonous mushrooms, making their presence in such groups reckless and potentially life-threatening.

The incident also highlights broader concerns about AI being imposed on users without a clear opt-out mechanism, as analyst Rohan Light points out. By prioritizing AI interactions over human expertise, Meta is eroding the collaborative safety net that these communities provide, further demonstrating the risks of unregulated AI deployment.

Businesses:

The AIPRM report shows that the benefits business owners expect from AI agents include: generating responses to customers (74%), quicker content generation (70%), creating personalized experiences for customers (58%), increasing web traffic (57%), streamlining job processes and summarizing information (53%), improving decision-making (50%), improving business credibility and translating information (47%), generating emails (46%), fixing coding errors (41%) and generating website copy (30%).

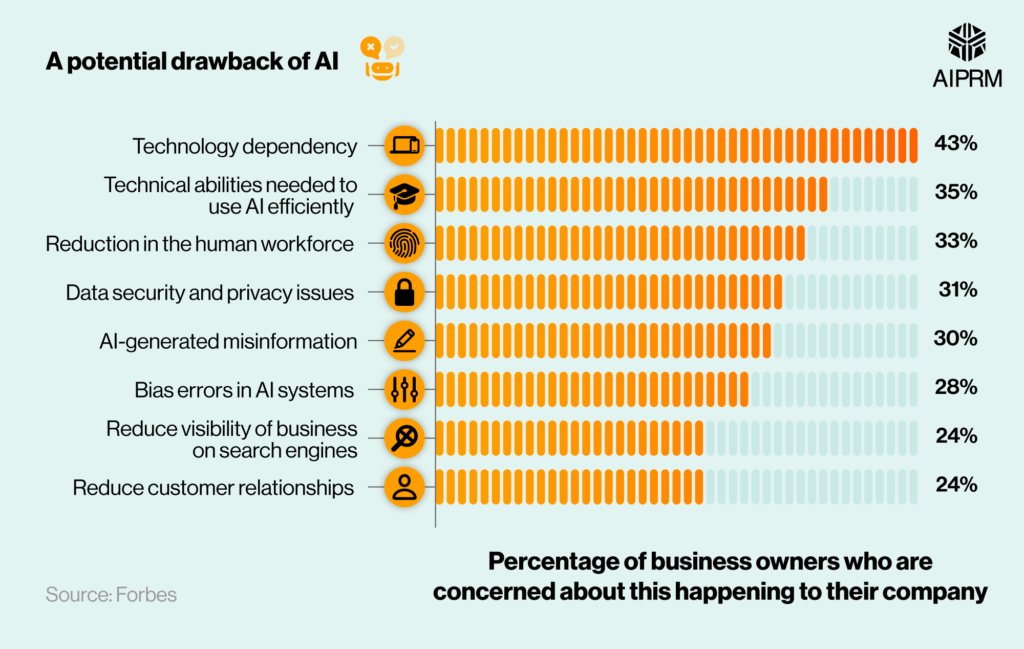

However, 43% of business owners worry about becoming too dependent on the technology. Additionally, 35% fear they lack the technical skills to use AI effectively, while a third are concerned about potential job reductions. Concerns about AI harming customer relationships were relatively low, with only 24% citing it as a major issue. AI-generated misinformation and data security and privacy issues likewise concerned only about 30% of American business owners.

AI Agents For Small Business – Mark Zuckerberg

Ethical and Social Dilemmas: Manipulation and AI Companions

According to Louis Rosenberg, CEO of Unanimous AI, AI is rapidly increasing its influence over human behavior through highly personalized and adaptive interactions.

Unlike early AI agents like the ghosts in the popular Pac-Man video game, today’s AI systems can analyze personalities, predict responses, and optimize persuasion techniques in real time. With conversational AI becoming more sophisticated and cost-effective, these agents could subtly manipulate users by mirroring human traits, leveraging personal data, and continuously refining their approach. This “AI Manipulation Problem” raises concerns about undue influence in marketing, politics, and decision-making. Rosenberg argues for regulations to prevent AI from optimizing persuasion tactics through feedback loops, to require transparency about AI’s objectives, and to restrict access to personal data that could be used for manipulation.

Without safeguards, AI could become an overwhelmingly effective tool for influencing human thoughts, behavior, and choices.

Louis Rosenberg on Collective Superintelligence and the Virtual Future | The Quantum Feedback Loop

In a recent experiment (pdf available), researchers created AI agents that simulate the attitudes and behaviors of over 1,000 people based on detailed interviews. These agents were tested to see how well they could predict individuals’ survey responses, personality traits, and decision-making in economic games. The results showed that the AI agents reproduced participants’ survey answers about 85% as accurately as the participants themselves did when asked the same questions again two weeks later. Compared to AI models that rely only on demographic data like age or political views, these interview-based agents were more accurate and less biased. This approach could help researchers better understand human behavior and test social policies before applying them in real life.

According to Kate Crawford, of Wired Magazine, personal AI agents will soon become deeply integrated into daily life, managing schedules, social interactions, and routines while presenting themselves as helpful assistants. However, these anthropomorphic AI systems are designed not just for convenience but also to subtly influence decisions, shaping what people buy, read, and believe.

Philosopher Daniel Dennett warned of the dangers of AI emulating humans, as these “counterfeit people” could manipulate individuals by exploiting psychological vulnerabilities.

Unlike traditional advertising or behavioral tracking, AI-driven persuasion operates at an intimate level, creating a sense of trust and companionship that makes questioning its motives seem unnecessary. This shift represents a new form of ideological control, where AI algorithms subtly shape reality to fit commercial and political agendas while maintaining the illusion of choice. Without proper safeguards, these AI systems could become powerful tools for psychological and behavioral manipulation, reinforcing biases and directing human thought in ways that remain largely imperceptible.

AI agents represent an inflection point in artificial intelligence, promising to revolutionize how we work, interact, and make decisions. However, with this power comes risk, including data privacy concerns, manipulation potential, and the challenge of ensuring reliability in high-stakes environments. Will these systems be transparent, ethical, and aligned with human interests, or will they reinforce biases, deepen inequalities, and serve hidden agendas?

The path forward requires a balance between innovation and governance. What role do you think AI should play in our lives?

Craving more information? Check out these recommended TQR articles:

- Deepfakes: The Technology of Deception and Quantum’s Uncertain Future With the Problem

- Is Data-Intensive AI Facing a Memory Limit? New Approaches Might Provide Solutions

- Swarms and Collective Intelligence: Bridging Biology, Sociology, and Computer Science

- Adaptive Intelligence: The Power of Genetic Mutations and Neural Networks

- What Comes Next, After Profit-Driven OpenAI Cracks the Intelligence Code?

- In Praise of Human Intelligence For All Time

- Trust is Fragile: Google’s Antitrust Loss and the Global Windows Failure in July are Warnings to AI Investors About the Future Value of Trust