One of OpenAI CEO Sam Altman’s international appearances in 2023.

By James Myers

The human superpowers of biology and imagination are so transformative that the reasons “why” we do something are often far more complex than “how” we do it.

The potential power and economic value of Artificial General Intelligence should place a spotlight on the question of why so many are now seeking to develop AGI.

We’ll focus on one human in the quest for AGI who dominates the news lately: Sam Altman, CEO of OpenAI, the company that introduced generative AI to the world in November 2022 with ChatGPT. Since then, Sam’s technology has been at the centre of intense debate about its merits, problems, and future, while Sam has become a celebrity commanding the attention of world leaders.

OpenAI CEO Sam Altman signs a partnership agreement to expand the use of generative AI in the United Arab Emirates and other regions. The New York Times reported on connections between the UAE’s G42 and a foreign superpower, raising the possibility of foreign manipulation of generative AI users. Image: Gary Marcus

Before asking “Why AGI?” we need to ask “What is AGI?”

OpenAI’s website declares: “Our mission is to ensure that artificial general intelligence—AI systems that are generally smarter than humans—benefits all of humanity.”

This raises several questions: how can a human program a machine to be smarter than humans, how can the machine avoid repeating human errors in its programming, and what does “smart” mean in the context of biological, thinking human beings?

Humans make programming errors, like the mistake that caused a fatal 2018 Tesla crash from autopilot malfunction. Image: Los Angeles Times (Associated Press)

Setting aside these questions, OpenAI’s is only one definition of AGI. Search the term and you’ll find a myriad of definitions. They all relate, in some form, to the human processes of thinking and learning – which the AGI-seekers (and the rest of us) are far from fully understanding. There’s inherent risk in attempting to replicate, and amplify, something that’s beyond our own knowledge, especially when our knowledge is far from perfect to begin with.

To illustrate the risk, consider OpenAI’s definition of AGI in its 2018 Charter: “highly autonomous systems that outperform humans at most economically valuable work.” In 2018, the mission was to develop AGI as an economic superpower; now, six years later, the goal is to outsmart humans. What will the goal of AGI be tomorrow, who will define it, and who will manage the associated risks?

Our Gratitude to Sam Altman

We should be grateful to Sam for acknowledging the risks of AGI that he and many others seek.

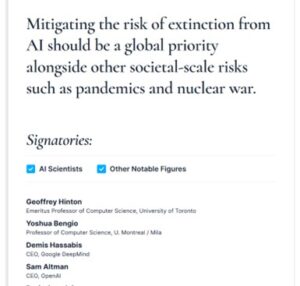

Center for AI Safety statement on AI risk signed by Sam Altman, among many other notables.

Sam’s February 24, 2023 blog states: “AGI has the potential to give everyone incredible new capabilities; we can imagine a world where all of us have access to help with almost any cognitive task, providing a great force multiplier for human ingenuity and creativity. On the other hand, AGI would also come with serious risk of misuse, drastic accidents, and societal disruption. Because the upside of AGI is so great, we do not believe it is possible or desirable for society to stop its development forever; instead, society and the developers of AGI have to figure out how to get it right.”

Sam’s blog wisely goes on to warn: “A misaligned superintelligent AGI could cause grievous harm to the world; an autocratic regime with a decisive superintelligence lead could do that too.”

We owe Sam gratitude for warning users of the risks of his large language model technology, which is seen as a precursor to AGI. He has issued warnings repeatedly in interviews, and, as OpenAI’s website acknowledges, “GPT-4 still has many known limitations that we are working to address, such as social biases, hallucinations, and adversarial prompts.”

Unfortunately, not all users see or heed warnings, including a number of lawyers who suspended critical thinking when they submitted AI-generated court briefs containing hallucinated citations of non-existent case precedents. Humans like to take shortcuts, sometimes without looking far enough ahead to see that the short path isn’t always the best path.

We should be very grateful to Sam for giving us a taste of a precursor to AGI, ChatGPT, while there’s still time to change the path to AGI. Sam was quite intentional in this, and it’s to his credit that he wants to help the world to prepare and adapt. As he wrote in his February blog:

“There are several things we think are important to do now to prepare for AGI.

“First, as we create successively more powerful systems, we want to deploy them and gain experience with operating them in the real world. We believe this is the best way to carefully steward AGI into existence—a gradual transition to a world with AGI is better than a sudden one. We expect powerful AI to make the rate of progress in the world much faster, and we think it’s better to adjust to this incrementally.

“A gradual transition gives people, policymakers, and institutions time to understand what’s happening, personally experience the benefits and downsides of these systems, adapt our economy, and to put regulation in place. It also allows for society and AI to co-evolve, and for people collectively to figure out what they want while the stakes are relatively low.”

We should demonstrate gratitude for Sam’s call for strict international governance of superintelligent AI. As he and co-authors wrote in a May 22, 2023 blog: “we are likely to eventually need something like an IAEA [International Atomic Energy Agency] for superintelligence efforts; any effort above a certain capability (or resources like compute) threshold will need to be subject to an international authority that can inspect systems, require audits, test for compliance with safety standards, place restrictions on degrees of deployment and levels of security, etc.”

For the past 66 years, the IAEA has helped to safeguard the world from nuclear war, and an agency of that calibre to oversee AGI – if the troubled state of international cooperation could even contemplate it – would offer much reassurance that dangers can be anticipated and mitigated.

Lex Fridman interviews Sam Altman and asks, at 1:30:37, “Do you anticipate, do you worry about pressures from outside sources? From society, from politicians, from money sources?” Sam’s reply: “I both worry about it and want it. Like, you know, to the point of we’re in this bubble, and we shouldn’t make all these decisions. Like, we want society to have a high degree of input here.”

We can be grateful that Sam established OpenAI as a non-profit organization. As his February 2023 blog states: “We have a nonprofit that governs us and lets us operate for the good of humanity (and can override any for-profit interests), including letting us do things like cancel our equity obligations to shareholders if needed for safety and sponsor the world’s most comprehensive UBI experiment.”

It is an honourable and selfless person who forsakes, for the benefit of humanity, what promises to be incredibly profitable.

A Trust Relationship Has Been Established, and Sam is the Trustee.

As head of the board of directors of the non-profit company that controls OpenAI, and with his authority reinforced by the ouster of the board that fired him for a few days in November 2023, Sam has effectively positioned himself as trustee of the planet’s potentially most valuable asset ever: AGI.

Fiduciary responsibility is acknowledged in OpenAI’s 2018 Charter, which states: “Our primary fiduciary duty is to humanity. We anticipate needing to marshal substantial resources to fulfill our mission, but will always diligently act to minimize conflicts of interest among our employees and stakeholders that could compromise broad benefit.”

With the pledge of profits from AGI to the public good, first in 2018 and recently reiterated in Sam’s blog, and with his acknowledgement of risks and responsibilities, Sam Altman has settled potentially incredible value on a trust with 7.8 billion humans as its beneficiaries. Sam has demonstrated acceptance of the burden of fiduciary duties, with actions like warning users and the public about risk of harm.

Is Sam fulfilling responsibilities as trustee of such a valuable and risky asset?

It’s on this question that we temper some of our gratitude to Sam, for the following reasons:

- To raise capital for research, OpenAI revised its 2018 Charter and created a limited partnership to develop the technology. The LP allows investors to make a return of up to 100 times their investment. While the non-profit board of directors controls the limited partnership, we have to ask: what profits will be left for the non-profit, after investors multiply their capital 100 times?

Consider that the major investor, Microsoft, as we previously noted, has privileged access to OpenAI data. Sam hasn’t told us what multiple Microsoft has been granted for its recent $10 billion investment, but if it’s a multiple of 100 then OpenAI will have to make $1 trillion (for Microsoft) before a penny goes to 7.8 billion humans. $1 trillion, which is $1,000,000,000,000, would be an outlandish return on investment eclipsing many times over the largest tech company profits of today.
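The arithmetic behind that figure can be checked with a short sketch. It assumes a single investor who is paid first until the cap is reached, and a 100x multiple; both are illustrative assumptions, since OpenAI has not disclosed the multiple granted to Microsoft or the order in which profits are distributed. The function names are hypothetical.

```python
def capped_payout(investment: int, cap_multiple: int) -> int:
    """Maximum total return owed to an investor under a capped-profit deal."""
    return investment * cap_multiple


def split_profit(total_profit: int, investment: int, cap_multiple: int) -> tuple[int, int]:
    """Split profit between one investor and the non-profit.

    Assumption (not confirmed by OpenAI): the investor is paid first,
    and only profit above the cap flows to the non-profit.
    """
    cap = capped_payout(investment, cap_multiple)
    to_investor = min(total_profit, cap)
    to_nonprofit = total_profit - to_investor
    return to_investor, to_nonprofit


if __name__ == "__main__":
    investment = 10_000_000_000   # the $10 billion cited in the article
    multiple = 100                # hypothetical; the real multiple is undisclosed
    print(f"Cap: ${capped_payout(investment, multiple):,}")
    investor, nonprofit = split_profit(1_500_000_000_000, investment, multiple)
    print(f"Of $1.5 trillion: investor ${investor:,}, non-profit ${nonprofit:,}")
```

Under these assumptions, any total profit at or below the cap leaves nothing for the non-profit, which is the concern raised above.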

On a de jure, or legal, basis, OpenAI remains a non-profit; on a de facto, or practical, basis, however, the company now seems to be primarily for-profit. The trust that Sam has created should disclose to its 7.8 billion beneficiaries how the value of their asset – the technology that will lead to AGI – is being managed and protected, and should disclose the multiples provided to each investor. A trustee’s fiduciary responsibilities include reporting the trust’s assets, liabilities, income, and expenses to the beneficiaries, yet OpenAI provides no public financial disclosures. In failing to disclose, the company does nothing to dispel questions about the even-handed management that a trustee is required to exercise for the good of all beneficiaries.

In January 2023, Microsoft announced the extension of a partnership under which, as OpenAI’s exclusive cloud provider, Microsoft’s Azure “will power all OpenAI workloads across research, products and API services.” In turn, Microsoft “will deploy OpenAI’s models across our consumer and enterprise products and introduce new categories of digital experiences built on OpenAI’s technology.”

- There is no mechanism for the beneficiaries to question the actions of the trustees, because the board of the non-profit company is not chosen by the beneficiaries and is not independent of Sam. The November 2023 turmoil of Sam’s firing, the board’s ouster, and Sam’s return to control of OpenAI within a week demonstrates that the board doesn’t answer to the public beneficiaries. How was the latest board selected? We don’t know.

Microsoft CEO Satya Nadella. Image: Wikipedia.

In a normal trust relationship, a trustee found failing to fulfill his fiduciary responsibilities can be dismissed by court action. Sam has placed himself and, by virtue of Microsoft’s investment, Microsoft’s CEO Satya Nadella in charge of an asset (AGI) potentially worth more than anything this planet has ever seen. The beneficiaries, however, have no recourse except to hope, and perhaps pray, that the trustees discharge their fiduciary responsibilities selflessly and with an even hand in spite of business interests.

History shows that the problem with money is that it can corrupt the best of intentions, and it can make enemies of friends. It’s a rare human indeed who can hold such tremendous power and unimaginable value and freely give it all away during their lifetime to the public good.

Sam started off on the right foot in this respect, with OpenAI’s Charter. But has the non-profit pledge now become window dressing, or is it, as we the beneficiaries hope, real?