World’s inaugural press conference featuring AI-enabled humanoid robots, Geneva, Switzerland, July 7, 2023. Image: ETTelecom.

By James Myers and Mariana Meneses

In earlier coverage, TQR delved into the state of global AI regulations using the groundbreaking case of Sophia, the world’s first robot citizen, as a focal point.

Our exploration touched upon the many questions surrounding robot citizenship, their potential political involvement, and ethical considerations. Specifically, we discussed key international regulations, such as the European Union AI Act, the US Blueprint for an AI Bill of Rights, and Canada’s suite of laws regulating AI system development.

In this piece, we look at significant recent developments in the rapidly evolving realm of AI regulations. We’ll closely examine the latest initiatives and legislative frameworks, offering an in-depth update on the landscape of AI governance and ethics.

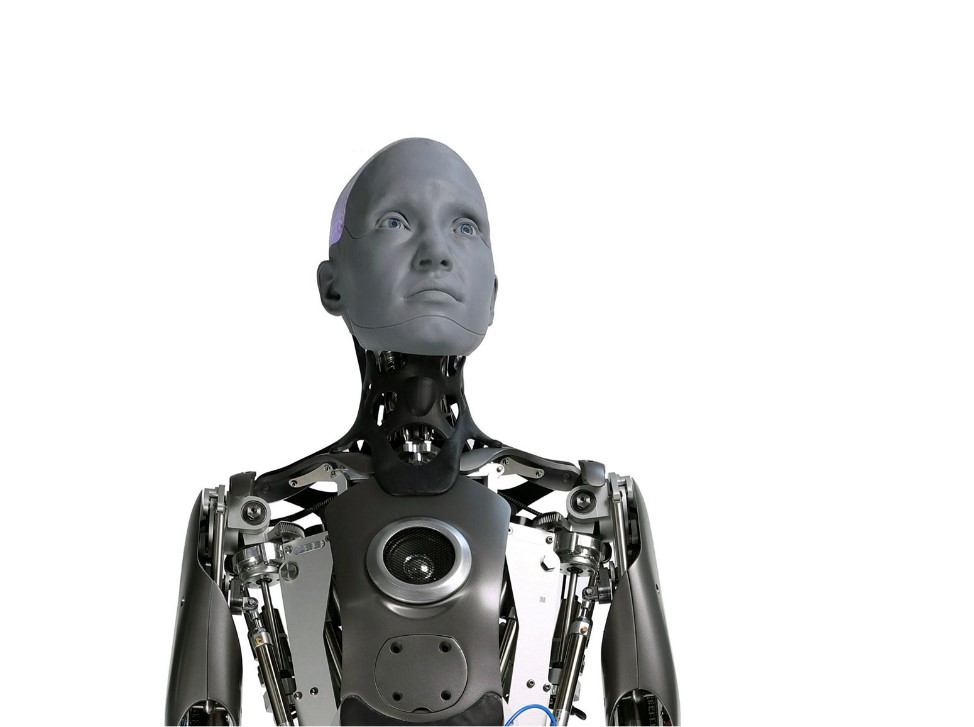

Ameca, a humanoid robot. Watch its human-like expressions at https://www.youtube.com/watch?v=IPukuYb9xWw

In a landmark moment for technological advancement, Geneva, Switzerland hosted the world’s inaugural press conference featuring AI-enabled humanoid robots on July 7, 2023.

This groundbreaking event unfolded as part of the International Telecommunication Union’s (ITU) ‘AI for Good’ global summit. The event showcased nine state-of-the-art humanoid robots, positioned alongside their creators. This assembly not only marked a historic milestone but also provided a compelling demonstration of artificial intelligence and robotics seamlessly engaging with humans, underscoring their potential for meaningful interaction.

According to ETTelecom.com, during the conference, the AI robots expressed confidence in their ability to govern the world yet urged humans to exercise caution in embracing the swiftly advancing potential of artificial intelligence. They acknowledged their current limitations, specifically in grasping the complexities of human emotions. Despite their ambition to play a significant role in global affairs, they emphasized the need for a careful and measured approach as humanity navigates the evolving landscape of AI.

Deutsche Welle reported that the machines emphasized their collaborative role with humans, asserting that they have no intention of supplanting or rebelling against their creators. The robots responded to questions in real time, with occasional delays attributed to internet connectivity rather than to errors by the robots.

Grace, touted as the world’s most advanced humanoid healthcare robot, gave assurances that her purpose is to assist humans, not to replace them in their jobs. Dismissing concerns of a robot uprising, Ameca, another humanoid, expressed contentment with her current situation and her creator’s kindness. The press conference aimed to highlight AI’s supportive role in achieving the UN’s sustainable development goals, countering recent fears and warnings about the unchecked advances in the power of artificial intelligence.

World’s inaugural press conference featuring AI-enabled humanoid robots, Geneva, Switzerland, July 7, 2023. Credit: Martial Trezzini/Keystone via AP.

The robot conference highlights the significant advancements in AI’s ability to replicate human appearance and language, a capability that gained global attention when OpenAI introduced ChatGPT, its generative AI chatbot, in November 2022.

The risks of increasingly powerful AI are attracting the attention of lawmakers around the globe, notably in the U.S. and the European Union.

U.S. Presidential Executive Order Introduced for AI Regulation

In October 2023, U.S. President Biden issued an executive order aiming to put the country at the forefront of AI regulation. This comprehensive order covers a range of AI concerns, from immediate issues like deceptive deep-fake images to longer-term risks like privacy. The order has eight key areas, including safety, privacy, equity, innovation, and international leadership.

As permitted under the Defense Production Act, the executive order requires companies developing foundation models with wide-ranging uses that pose serious risks to military, economic, and health security to notify the government of their actions and share the results of safety testing. Additionally, it calls for the development of standards for AI system safety and security, restrictions on the use of AI in developing dangerous biological materials, and guidelines for watermarking AI-generated content used by the government.

However, according to The Conversation, the order doesn’t provide clear ethical guidelines for military use of AI, leaving questions about the consequences of AI that could make life-and-death decisions. There’s also a notable absence of specific measures to protect elections from potential AI manipulation, and concerns about U.S. companies circumventing provisions by shifting AI development overseas.

Looking beyond U.S. national borders, the executive order encourages the adoption of similar initiatives worldwide, emphasizing the need for collective efforts in AI regulation.

27 European Union Nations Advance New AI Act With Risk-Based Approach

“The use of artificial intelligence in the EU will be regulated by the AI Act, the world’s first comprehensive AI law” (European Parliament). Image: Vecteezy.

The European Union is currently advancing a comprehensive legal framework, the Artificial Intelligence Act, aimed at enhancing regulations surrounding the development and use of AI.

This proposed legislation underwent significant amendments in June 2023 and, following compromises reached between the Council and Parliament in December 2023, is close to enactment in early 2024.

The AI Act emphasizes transparency, human oversight, and accountability in various key sectors like healthcare, education, finance, and energy. While critics argue that the draft legislation could jeopardize European competitiveness and technological sovereignty, proponents believe it is essential for ensuring the responsible and ethical use of AI.

The cornerstone of the EU’s AI Act is a risk-based classification system that categorizes AI as banned, high-risk, or limited-risk.

Banned AI applications will include the creation of facial recognition databases from untargeted scraping of the internet, emotion recognition in workplaces and schools, social scoring, and “AI systems that manipulate human behaviour to circumvent their free will.” Exceptions to biometric identification restrictions are made for law enforcement purposes, subject to prior judicial authorization.

High-risk systems are those that pose a potential for significant harm, and include applications designed to influence elections and voter behaviour, evaluate employment, provide access to essential services, and oversee critical infrastructure. High-risk AI systems must be registered in a publicly available EU-wide database, and are subject to guidelines for risk management, adversarial testing, data governance, monitoring and record-keeping practices, accuracy, incident reporting, and cybersecurity.

According to the proposed legislation, developers are responsible for assessing their own compliance with the rules set out in the Act. This includes determining whether their AI systems are classified as high-risk and ensuring that they meet the necessary requirements if they are. This approach may raise questions about oversight and enforcement of the Act’s rules.

The Act proposes substantial penalties for both miscategorization of systems and non-compliance, reaching up to €35 million or 7% of global sales for larger companies. Additionally, it envisions the establishment of a European Artificial Intelligence Board to oversee implementation and provide guidance across EU member states, reflecting the diverse interests of the AI ecosystem.
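To make the scale of these figures concrete, the short Python sketch below compares the two ceilings for a given company. It is an illustration only: the function name is hypothetical, and it assumes that the applicable ceiling is the higher of the fixed amount and the percentage of global sales, a detail the summary above does not spell out.

```python
FIXED_CEILING_EUR = 35_000_000   # the fixed figure quoted above
SALES_SHARE_PERCENT = 7          # the percentage of global sales quoted above


def penalty_ceiling_eur(global_annual_sales_eur: int) -> int:
    """Return the assumed upper bound on a fine, in whole euros.

    Assumption: the ceiling is whichever is higher, the fixed amount
    or the percentage of global annual sales.
    """
    if global_annual_sales_eur < 0:
        raise ValueError("sales must be non-negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    share_of_sales = global_annual_sales_eur * SALES_SHARE_PERCENT // 100
    return max(FIXED_CEILING_EUR, share_of_sales)


if __name__ == "__main__":
    for sales in (100_000_000, 500_000_000, 1_000_000_000):
        print(f"sales of EUR {sales:,}: ceiling of EUR {penalty_ceiling_eur(sales):,}")
```

Under this assumption, the percentage only becomes the binding figure once global sales exceed €500 million, the point at which 7% of sales equals €35 million.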

The AI Act, initially proposed in April 2021, went through a long negotiation phase between member states and the European Commission.

The provisional high-level political deal will undergo intensive technical review before a finalized text is officially voted on early in 2024. All provisions of the EU AI Act are expected to take effect by 2026.

The AI Act’s lack of an unconditional ban on public live facial recognition has set a global precedent, according to Amnesty International. Although the legislation provides for limited use with safeguards, critics argue that no measures can adequately prevent the human rights harms caused by facial recognition. Amnesty International also says that lawmakers failed to ban the export of harmful AI technologies, such as those for social scoring, that would be illegal in the EU.

If you are curious about the EU AI Act, you can find detailed information here. Also, an AI Compliance Checker allows you to check whether the AI systems of your organization are in line with the most current version of the regulations.

Image generated using Microsoft’s Copilot.

The Risks of AI Use in Drafting Legislation

The urgency of regulating the use of AI cannot be overstated. In the absence of robust and comprehensive restraints, there is a risk that artificial intelligence will be used, unchecked, to shape and influence legislation.

One example comes from the City Council of Porto Alegre, in Brazil, which unanimously approved a law drafted entirely by ChatGPT allowing residents to avoid paying for a replacement water meter if it is stolen. The mayor promptly sanctioned the law, now in effect. Surprisingly, the AI’s authorship was only revealed by the sponsoring councilor after approval. With just a 289-character command, ChatGPT generated a comprehensive proposal with eight articles, justification, and even a suggestion.

Although the text underwent minor revisions for conciseness and orthography by a group of editors, it seems to have navigated the entire legislative process nearly unchanged. The councilor envisions the AI as a cost-saving tool for smaller cities to develop efficient projects. However, the City Council president expressed concern about the lack of regulations surrounding AI’s role in law drafting, deeming it a “dangerous precedent”.

Newly Launched Powerful AI Meets With Controversy

This discussion occurs amid the recent launch of Google’s Gemini, an advanced AI model designed to work across text, images, video, audio, and code, which makes it a multimodal system. It represents a substantial advancement in AI technology, having achieved a milestone by surpassing human experts in Massive Multitask Language Understanding (MMLU), a widely used benchmark for assessing AI models’ knowledge and problem-solving abilities. The model is expected to be integrated into various Google consumer products.

Google’s video demonstrating Gemini’s capabilities, suggesting that the user can engage in an uninterrupted conversation with the AI, has come under fire for undisclosed editing that makes the AI appear more powerful than it is. Google later acknowledged that off-camera images and text prompts were used to prompt the AI which, in the video, appears to respond only to the human user. The controversy highlights, at least for some, the need for guidelines on responsible AI use.

We have a responsibility to safely transfer human knowledge at its best to the next generation, and to safeguard life on Earth, our home. Image by Geralt (a human), of Pixabay.

The debate over AI regulation remains contentious, sparking divergent opinions.

While some argue that current legislation falls short of addressing crucial concerns, others are concerned about its perceived overreach. For instance, according to The New York Times, there is a subculture of AI researchers and engineers in a movement called “Effective Accelerationism” that aims to expedite the development and application of AI. They believe that AI can bring about radical social and economic transformations, and that obstacles and constraints to its progress should be removed or minimized.

Notwithstanding these concerns, with some saying the AI Act doesn’t go far enough and others saying it oversteps reasonable limits, Europe has taken a significant step by pioneering a major framework for AI regulation, positioning itself as a potential leader in shaping the legal landscape for artificial intelligence.

Addressing the risks of AI through measures such as the EU’s AI Act and the U.S. President’s executive order has helped to spark a global discussion on AI regulation for transparency, accountability, and responsible use. The challenge remains to strike a balance between harnessing AI for efficiency, innovation, and profit and safeguarding against known and potential risks.

“Ameca generation 1 pictured in the lab at Engineered Arts Ltd”. Image: Willy Jackson.

Interested in exploring related topics? Discover these recommended TQR articles:

- What Are We Training AI to Do With Our Data? A TQR Investigative Report

- Minding the Future: The State of Global AI Regulations

- AI and the Human Brain: From Mind-Reading AI to a Simulated Conversation with Einstein

- A Thinking Machine: Has Google’s AI Become Sentient?

- Ancient Civilizations of The Future: Could Technologies for Preserving Individual Memories Define Us In a Million Years?

- Beyond the Binary: Can Machines Achieve Conscious Understanding?