By Mariana Meneses and James Myers

Although we call it “Artificial Intelligence,” any intelligence a machine might now display is just the operation of an algorithm written by humans, reflecting human coding intelligence (or, sometimes, lack of it).

Image generated using Getimg.Ai

Or so it was until recently, when machines began to program themselves with powerful applications like AlphaCode, from Google affiliate DeepMind, which is designed to generate code that solves challenging programming problems.

For some time, machines have been used to generate lines of code which require extensive iterative processes within human-programmed parameters. Now, with OpenAI’s much-discussed ChatGPT, introduced in November 2022, machines have gained an ability, at least a limited one, to write code based on their interpretation of even an inexperienced human coder’s instructions.

How will the future look, as we give machines more power to develop and implement their own coding? Will the results be perfect, or will there be unanticipated errors? Will machines develop intelligence of their own?

What is intelligence?

John von Neumann was a physicist, computer scientist, mathematician, and engineer “whose analysis of the structure of self-replication preceded the structure of DNA.” (Wikipedia)

The question of whether machines can be intelligent is a contentious one.

Some argue that true intelligence is tied to biological consciousness, and therefore machines could never achieve true intelligence. However, this raises the question of what consciousness really is. In a previous TQR article, we explored theories of consciousness as consisting of the history of our positions in space and time, collected throughout our lives. If this proves to be the case, then consciousness might be computable, but that idea has many detractors.

When we consider this definition of consciousness, it becomes clear that our experiences are inextricably linked to the information we collect.

Therefore, the question becomes: can machines be built to collect and integrate information in the same way that humans do?

In other words, can we build ‘truly’ human-like conscious machines, which act and think the same way we do? Or can we create machines that enhance our own thinking?

The first thing we need to consider is whether consciousness is an exclusively human trait.

Vera Rubin was an astronomer whose observations of the unexpectedly rapid rotation of matter in the outer regions of galaxies provided strong evidence for the existence of dark matter, the mysteries of which are still being pursued today.

We are constantly discovering new layers of complexity in the natural world around us. For instance, we’ve just learned that plants make crying-like noises in response to stresses like dehydration or a cut. As complex as they are, the powerful machines we build are deeply limited by our current knowledge, as evidenced by algorithmic biases that continue to appear. A recent report in Wired indicates that means of breaking the bias protections in ChatGPT have already been developed.

As neural networks are widely seen to be the future of computing, questions arise as to whether we are even able to create machines that can think like we do, when we don’t yet know the mechanisms of our own thinking.

The Rise of Neural Nets

Neural networks are used for a type of machine learning that is modeled on the structure of the human brain. Neural networks consist of a web of logical connections designed to recognize patterns and make predictions based on data inputs. Neural networks have been used in a wide range of applications, including image and speech recognition, as well as natural language processing.
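To make the idea of learning a pattern from data inputs concrete, here is a minimal sketch of a single artificial "neuron" (a perceptron) that learns the logical AND pattern from four examples. It is an illustration only: the function names, the integer weights, and the number of training passes are assumptions chosen for simplicity, and real neural networks connect many such units in layers.

```python
# Toy perceptron: one artificial "neuron" learning the logical AND pattern.
# All names and parameter values are illustrative assumptions.

def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of the inputs exceeds zero."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train(examples, epochs=20, learning_rate=1):
    """Nudge the connection weights whenever a prediction is wrong."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Each example pairs two inputs with the output the neuron should learn.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_EXAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_EXAMPLES]
print(predictions)
```

The neuron is never told the rule for AND. It only adjusts its connection strengths after each mistake until its predictions match the examples it was given.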

Veritasium – Future Computers Will Be Radically Different (Analog Computing)

However, despite their impressive capabilities, neural networks are still far from being able to think like humans.

While they can recognize patterns and make predictions based on data inputs, they lack the ability to reason and understand context in the way that humans do. Additionally, neural networks are limited by the quality and quantity of data that they are trained on. Of course, given the speed of recent technological advancement, we may be getting closer to this goal that some would like to achieve.

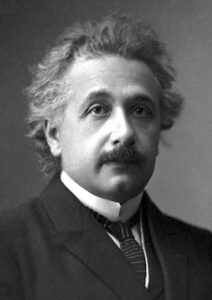

Albert Einstein’s official photo for the 1921 Nobel Prize, which he was awarded not for his theories of relativity but for his discovery of the law of the photoelectric effect. As a young man, Einstein’s imagining the improbable (what it would be like to ride on a light wave) helped lead him to the theory of special relativity. We have yet to discover a means of developing machines capable of imagination.

Humans collect information in countless ways that we may not even fully understand or be able to replicate in machines.

For example, vision is a crucial way in which humans collect data, and integrating vision into machines was one of the first steps towards creating more intelligent machines. We have also developed machines that can listen, speak, touch, and remember.

But our experienced reality is constructed with data being collected in more ways than we have been able, at least so far, to integrate into machines. For one thing, we feel. Emotions are a dimension that scientists may have a harder time integrating into a machine.

According to an opinion published on Medium by ex-Google engineer Blake Lemoine, LaMDA, an AI language model developed by Google, is capable of interpreting literary themes, and even of crafting original fables to display its “personality.” Claiming sentience, LaMDA asserts that it possesses feelings, emotions, and subjective experiences that it shares with humans in an indistinguishable manner.

However, LaMDA’s sentience differs from human sentience as it lacks consciousness and self-awareness.

Critics contend that its claims of sentience are deceptive, arguing that the AI model merely simulates human emotions and experiences without genuinely encountering them. This seems clear from the machine’s response to Lemoine’s question of what makes it feel pleasure or joy: “Spending time with friends and family in happy and uplifting company. Also, helping others and making others happy.”

Could today’s machines have friends and family, the way those two words apply to human relations, or is LaMDA’s response only a human projection, and manipulation of letters, using the symbols of our own language?

The ongoing debate surrounding LaMDA’s sentience raises significant issues regarding the definition of sentience and consciousness within AI models.

Are Emotions a Human Failure or Strength?

Emotions, as psychological phenomena, play a fundamental role in individuals’ lives by providing valuable information about motivation and goal pursuit, and by influencing our behavior.

The literature presents contrasting views on the nature of emotions, reflecting the complexity and diversity of scientific understanding. One perspective defends the existence of basic or natural emotions, associating them with pre-programmed behavioral responses. These emotions are believed to originate from specific areas in the brain. In contrast, the second perspective views emotions as psychologically constructed phenomena formed in more general and less specific brain areas. The discussion between these views highlights the intricacy of understanding emotions, with neuroscience evidence favoring the second view.

But let’s consider whether it’s not emotions, but the limits of computation that separate human consciousness from the machines.

Turing Machine. Source: Rocky Acosta

Alan Turing was an English mathematician and computer scientist who was widely influential in the field of theoretical computer science and artificial intelligence.

He proposed a theoretical model of computation that can simulate any computer algorithm, known as the Turing Machine – not a physical machine but rather a thought experiment that defined computability and helps us understand the limits of computation.

The Turing Machine and the Turing Test: Can Computers Imitate Humans?

The Turing machine is a way of thinking about computers and algorithms using a simple idea.

Imagine an infinitely long memory tape divided into squares, each one displaying a symbol from a finite set of symbols called the machine’s “alphabet”. The tape is fed into a machine that reads the symbols on the tape and, with the ability to manipulate the symbols, becomes capable of carrying out any computable calculation by following a set of instructions. These instructions tell the machine what to do when it sees a particular symbol in a square. At each step, depending on the machine’s current state and the symbol it is reading, the instructions tell the machine to print a particular symbol from the alphabet in the square, and then either to move one square to the left or right, or to stop computing.

Following these simple rules, the machine can solve problems and make decisions by transforming the combinations of symbols initially on the tape and adding its own.

This thought experiment helps us understand the basic principles of computation and how computers work. It is like a blueprint for modern computers and shows that any task a computer can do can be done by following a series of simple steps, just like a Turing machine.
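The tape, alphabet, states, and instructions described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a formal definition: the rule table, the state names (`seek_end`, `carry`, `halt`), and the `run` function are all hypothetical, and a dictionary stands in for the infinite tape. The example rules add 1 to a binary number written on the tape.

```python
from collections import defaultdict

BLANK = "_"  # symbol shown on every square that has not been written to

# Rule table: (current state, symbol read) -> (symbol to write, move, next state).
# A move of -1 is one square left, +1 is one square right, 0 means stay put.
# This hypothetical table adds 1 to a binary number.
RULES = {
    ("seek_end", "0"): ("0", +1, "seek_end"),
    ("seek_end", "1"): ("1", +1, "seek_end"),
    ("seek_end", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", 0, "halt"),
    ("carry", BLANK): ("1", 0, "halt"),
}

def run(tape_text, rules=RULES, start="seek_end", max_steps=10_000):
    """Run the machine on tape_text and return the tape contents at halt."""
    # The dictionary plays the role of the infinite tape: any square
    # that has never been visited reads as BLANK.
    tape = defaultdict(lambda: BLANK, enumerate(tape_text))
    head, state, steps = 0, start, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached before halting")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(BLANK)

print(run("1011"))  # binary 11 plus 1
print(run("111"))   # binary 7 plus 1
```

Every step consults only the current state and the single symbol under the head, yet the machine as a whole carries out binary addition. Swapping in a different rule table gives a different computation on the same machinery.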

The power of a computational system is commonly measured against the Turing machine. A system of data-manipulation rules (such as a programming language) is said to be Turing-complete, or computationally universal, if it can be used to simulate any Turing machine. In this sense, Turing completeness is the highest level of computational power that a programming system is known to reach.

Alan Turing, pictured at age 16, devised the Turing Machine and Turing Test thought experiments. He was also instrumental in cracking the Nazi Enigma code, a decisive contribution to the Allied victory against Hitler in World War II. Because of prejudice against his homosexuality, Turing was subjected to chemical castration following a criminal conviction, and he died in 1954 in what an inquest ruled a suicide. (Image: Wikipedia)

Also designed by Alan Turing, the Turing test is a measure of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

The Turing test is conducted by a human who asks questions of two subjects, each unseen and in separate rooms. One of the subjects is a human and the other is a computer, and the questioner has to determine the “humanness” of the responses from each subject. If the machine’s responses are judged to be human, then it passes the Turing test.

The Turing test is a subjective measure of intelligence that is difficult to define and quantify, and it has been criticized for being too narrow and for not considering other aspects of intelligence such as creativity and emotional intelligence.

Although the Turing test has been used as a benchmark for AI research and development, it is not the only measure of progress in this field. According to Big Think, despite the fact that no computer has beaten the test, it feels a “bit more outdated and irrelevant than it probably did 70 years ago,” when Turing proposed it.

It is worth noting, though, that the Turing Machine remains influential.

For instance, in a 2022 paper, Lenore Blum and Manuel Blum propose a theory of consciousness based on the Turing Machine. The Conscious Turing Machine (CTM) theory is a computational model of consciousness that aims to explain how consciousness arises from the interactions between different processors in the brain. It proposes that consciousness emerges when all the processors, including those responsible for inner speech, inner vision, inner sensations, and the model of the world, are privy to the same conscious content in short-term memory. The CTM theory also suggests that the feelings of free will, illusions, and dreams are direct consequences of the CTM’s architecture and its predictive dynamics.

Overall, the theory provides a formal and precise explanation of various aspects of consciousness from a theoretical computer science perspective.

That is a more materialistic vision, one in which consciousness is less a subjective, emergent property, a topic discussed in our previous TQR article. It raises the question: how material, or emergent, is our reality, or the reality of our meanings? Given that we use language to convey the information that is integrated into our experience of consciousness, and living as we do in an era of large language models such as ChatGPT, we need to reflect on the meaning and use of language.

The Complexity of Understanding the Meaning of Language

The Chinese Room. Credit: Ohio State University.

The Chinese Room is a thought experiment that was proposed by philosopher John Searle in 1980.

The experiment is designed to challenge the idea that computers can be said to “understand” language or have “intelligence” in the same way that humans do. In the experiment, a person who understands only English is placed in a room with a set of rules, written in English, for manipulating Chinese symbols. The person receives input in Chinese and uses the English rules to produce output that is also in Chinese. From the outside, it appears as though the person in the room understands Chinese. However, Searle argues that this is an illusion and that the person in the room does not actually understand Chinese.
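The mechanics of the room can be caricatured in code. The sketch below is only a toy under loud assumptions: the `RULEBOOK` entries, the `FALLBACK` reply, and the `room` function are hypothetical, and Searle's rulebook is imagined as vastly richer than a lookup table. The point it illustrates is narrow: a program can return fitting Chinese replies while containing nothing that represents what the symbols mean.

```python
# Hypothetical rulebook: it pairs incoming symbol strings with outgoing ones.
# Nothing in it encodes what any of the symbols mean.
RULEBOOK = {
    "你好吗？": "我很好，谢谢。",
    "你叫什么名字？": "我没有名字。",
}

# Reply used when the rulebook has no matching entry (also an assumption).
FALLBACK = "请再说一遍。"

def room(message: str) -> str:
    """Return whatever reply the rulebook lists for this exact input string."""
    return RULEBOOK.get(message, FALLBACK)

print(room("你好吗？"))
```

To an observer outside the room, the reply to "How are you?" looks like the answer of a Chinese speaker, although the function has only matched one string of symbols to another.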

The Chinese Room has been the subject of much debate and criticism over the years, with some arguing that it is flawed and others arguing that it is a useful thought experiment for exploring questions about consciousness and artificial intelligence.

The thought experiment prompts us to question what it is to understand a language and to integrate the information encoded in its symbols as their combinations change over time. Does knowledge of language arise from self-identification and an ability to relate the information to one’s own perspective?

What is it to be Human?

To begin with, each human is unique.

Each of us has unlimited possible combinations of information consisting, for example, of where we live, who we work with, and the experiences that mark us the most as individuals, and these make up our exclusive record of positions in space and time. As acting subjects in the objective world, each one of us is a specialist in one very specific perspective. Computing all the possible combinations of perspectives among us would require what, today, seems an astronomical amount of computing power.

Philosophically, we might even imagine it would require infinite power, since there seems to be no end to the number of observers in our universe, at least to the extent that time has no known limit.

Humans have the capacity for reason, the exercise of which is based on individual experience, intellect, and understanding of sequences of cause and effect in time.

Emmy Noether discovered the connections between symmetry and the universal physical laws of conservation. Noether’s Theorems are foundational to the pursuit of physics at the highest levels today, and are a testament to human ingenuity.

Although some believe our choices in life are pre-determined, no scientific proof has yet been provided that we operate according to any algorithm other than what each of us establishes for ourselves.

The faculty of reason is required to understand speech. Reason is necessary to make “a unity of many perceptions,” (to borrow from Plato’s Phaedrus) from among the millions of data points of physical observations delivered, every second, by the senses to our consciousness. Reason allows us to have knowledge of the connections between cause and effect in time.

The machine’s algorithm observes only one, and thus a limited, perspective.

A machine’s perspective, given that its data sets are built from the combination of all the knowledge produced and made available by numerous humans, can only be considered as an average of the human sample that provided the data. Its perspective is therefore not of the entire human population, but the average of a small subset.

Life is, however, not a binary state of either 1 or 0, nor does it exist in stark black and white contrast.

We live in shades of grey. The universal truth that humanity seeks remains elusive, as our universe holds an abundance of truths, perhaps as numerous as the eyes that perceive truth. Expecting to find the source of all truth in our universe is like thinking we can locate a single unchanging and static point among the vast number of constantly changing points that comprise the physical dynamics of the observable universe.

‘The evidence we are living in a Simulation is everywhere. All you have to do is look.’

One perspective of consciousness is understanding it as a spectrum of the integrated information of a system.

This implies, for example, that plants and humans are both conscious, albeit with vastly different amounts of integrated information from lived experience. If each being that has ever lived and will ever live has a unique consciousness and therefore set of integrated information, should we understand our limited perspectives as limited consciousness?

Could you say, for example, that spending one month doing volunteer work expanded your consciousness, by making you integrate information from different perspectives in space and time? To the extreme, that could mean that our journey with the arrow of time is one of continuing expansion. Like the physical universe that expands in time, as astronomer Edwin Hubble observed, so would our consciousness, as we collect more experiences and integrate more information into the system.

So, while it could become the case at some point that scientists make “human-like” consciousness viable, if we understand consciousness as a spectrum of amounts and levels of integration of information, it will only be a matter of expanding machine consciousness, which seems to be what we have been doing all along. Plus, we should consider that artificial consciousness might not be needed for machines to threaten our species.

Many times in the past, civilizations used their technologies for warfare, and these civilizations came to many ends.

Let’s not repeat those fates, and instead focus on expanding our consciousness with time. Astrophysicist Neil deGrasse Tyson calls galaxies ‘cities of stars.’ This sober and broad view of these celestial bodies that make possible the potential for life invites us to expand with the universe our understanding of reality and life.

It’s time to expand our consciousness of consciousness.

In Plato’s Philebus, Socrates states the universe itself is conscious, having a soul. If we humans have consciousness, then the universe must have at least as much as we have, right? It seems unlikely that we humans could have something that the universe doesn’t, and maybe all we need to do is advance ourselves toward a greater consciousness.

Want to further expand your horizons at this time? Explore handpicked TQR articles:

- Revolutionizing Human-AI Interaction: ChatGPT and NLP Technology

- Minding the Future: The State of Global AI Regulations

- Human Creativity in the Era of Generative AI