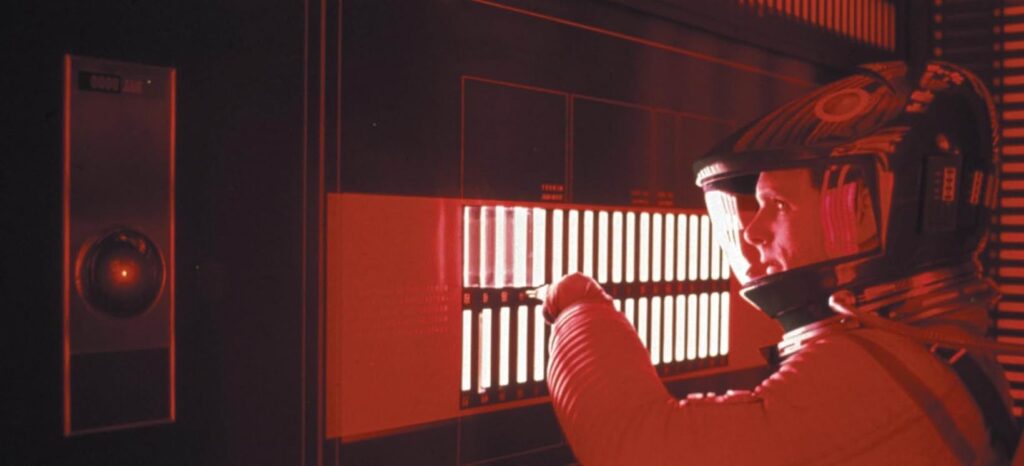

HAL 9000 was the computer in Arthur C. Clarke’s fictional 2001: A Space Odyssey programmed to deliver a human crew to Jupiter. The computer’s logic failed and, after it murdered all but one of the crew, its operation was finally halted by the sole survivor. Image from the 1968 classic film by Stanley Kubrick, which was reviewed in our January 2023 feature, from imdb.com.

By James Myers

We now have generative AI like OpenAI’s GPT-4 and Google’s Gemini chatbots that mimic human behaviour, and supercomputers that use vast arrays of variables to model climate patterns, molecular dynamics, and optimization problems. There’s no doubt that we are developing machines with extremely powerful computational abilities.

Existing computing power will be greatly amplified, perhaps exponentially, when quantum computers become fully functional. A new proof, however, implies that as powerful as we can make our machines, there is a theoretical limit to what they can compute. When we reach the limit of computation, where will we turn next?

The theoretical limit and practical limits of computation are different things.

Hans-Joachim Bremermann calculated the physical limit of computation. Image: Wikipedia .

Before we encounter the theoretical limit, there are of course many practical barriers to computation, including factors like storage space and other physical constraints such as energy consumption and size of the machine. The laws of physics place physical constraints on computing, which mathematician and biophysicist Hans-Joachin Bremermann (1926-1996) calculated as the maximum rate at which data can be processed by any independent material system.

Bremermann’s limit is based on the speed of light, (denoted by c), and Heisenberg’s Uncertainty Principle which involves the Planck constant, (denoted by h, which equals the energy of a particle of light divided by its frequency and is effectively the minimum measurement of the action of light). Bremermann’s physical limit of computation is c2/h, which approximates a staggering 1.3563925 × 1050 bits per second per kilogram.

In 1981, Jakob Bekenstein calculated what is now known as the Bekenstein bound, which is the area limit in which information can be stored. The Bekenstein bound says that, for instance, the maximum information that can be stored in a black hole is equal to the number of Planck areas that would be required to cover the black hole’s boundary which is called the event horizon.

A simple computer’s theoretical limit of computation was discovered by a collaboration of scientists and citizen scientists.

Tristan Stérin launched the Busy Beaver challenge. Image: Tristan Stérin.

The Busy Beaver challenge to determine the theoretical limit of computation was launched in 2022 and with the help of twenty contributors, the majority of whom did not have academic credentials, recently announced a conclusion based on the mathematics of computation. The Busy Beaver challenge was initiated by Dr. Tristan Stérin, who holds a PhD in computer science, and the answer it provided is 47,176,870.

That’s the maximum number of computational steps that are theoretically possible in the operation of a simple computer, one whose instructions can be listed in a table and operates with five rules, or instructions. The table consists of a grid of squares, each row containing a computational rule with two columns for each instruction consisting of outputs, one column containing a 0 and the other containing a 1 like the outputs of bits in today’s computers.

The full story of the Busy Beaver challenge, its conclusion, and the origin of the beaver analogy from a 1962 paper by mathematician Tibo Radó, is in a Quanta Magazine feature published this month.

Alan Turing, pictured at age 16, devised the Turing Machine and Turing Test thought experiments. He was instrumental in cracking the Nazi Enigma code, a decisive contribution to the Allied victory against Hitler in World War II. Because of prejudice against his homosexuality, Turing was awarded with chemical castration, which led to his suicide in 1954. (Wikipedia)

The problem that Stérin was aiming to assess with the Busy Beaver challenge is called the “halting problem,” because the goal was to determine the number of computational steps that could be performed before the device reaches a limit of, or halts, its processes. The simple computer is better known as a Turing machine, first proposed by mathematician Alan Turing in 1936 as a way of thinking about algorithms and computers.

As we described the Turing machine in our June 2023 article Beyond the Binary: Can Machines Achieve Conscious Understanding?, imagine an infinitely long memory tape divided into squares, each one displaying a symbol from a finite set of symbols called the machine’s “alphabet”.

The tape is fed into a machine that reads the symbols on the tape and, with the ability to manipulate the symbols, becomes capable of any calculation by following a set of instructions. These instructions tell the machine what to do when it encounters a particular symbol in each square. At any time, with reference to the machine’s current state and particular combination of symbols to that point, the instructions tell the machine to apply a particular symbol from the alphabet in the square, and then either move to the square on the left or right, or stop computing.

Following these simple rules, the machine can solve problems and make decisions by transforming the combinations of symbols on the tape and adding its own.

For example, one rule might be, “If you encounter a 0, replace it with a 1, move one step to the right, and consult rule X,” in the first column, and “if you encounter a 1, leave it unchanged, move one step to the left, and consult rule Y,” in the second column. All rules follow a similar pattern, except for one special rule that tells the machine when to halt its calculations.

The Turing machine was a thought experiment by Alan Turing designed to address mathematician David Hilbert’s goal to automatically derive every true mathematical statement. He envisioned a machine that could decide whether any given problem was mathematically calculable, outputting either ‘yes’ or ‘no’ represented numerically by a 1 or a 0.

A prototype of a Turing machine. A real Turing machine would have a tape that is infinitely long. Image: Rocky Acosta.

As computer scientist Dr. Apostolos Syropoulos explains in Philosophy Now, Hilbert’s motivation appears to have come from the series of axioms famously used to prove geometric and mathematical relationships in The Elements, written by Euclid 2,400 years ago. Hilbert’s idea was that if it’s possible to prove or disprove any mathematical or geometric axiom then it should be possible to represent the axioms symbolically – for instance, using 1’s and 0’s – and compute their relationships.

What’s next for computing, and for what we can imagine?

Mathematician Kurt Gödel, 1906-1978, established the Incompleteness Theorems.

Although Hilbert’s program to prove any axiom was later demonstrated to be impossible by mathematician Kurt Gödel’s Incompleteness Theorems, which establish that every theorem depends on a preceding theorem and the first theorem is unknowable, the question remained what limit would be computable.

Our knowledge is constrained not only by Gödel’s Incompleteness Theorems, but also by Heisenberg’s Uncertainty Principle, a foundational principle in quantum mechanics that we mentioned above. The Uncertainty Principle, which applies at the universal minimum of the Planck constant, says that the more we know about the physical position of an object in space the less we know about its momentum in time, and vice versa.

Now that the five-rule busy beaver problem has been addressed, the hunt can begin for the sixth busy beaver and then presumably the halting limits for as many rules as can be imagined thereafter.

Using specific rules, a computation provides an exact output for a sequence of inputs.

The proof that there is a computational limit associated with a given number of rules implies that a rules-based approach to associating inputs with outputs can only get us so far in figuring out a universe that’s potentially infinite. It might make us wonder what, if anything, exists beyond computational limits, and whether a long-sought theory of everything could ever be attained.

We can imagine whether something lies beyond the limits of a simple computing device like a Turing machine, or a function that’s far more complicated like the operation of space and time. In asking the question, it seems important to consider that a computing device of any complexity cannot, as Dr. Syropoulos points out, solve its own halting problem although it can solve the halting problems of other machines.

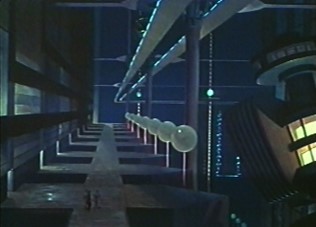

The 1956 classic science fiction film Forbidden Planet featured a supercomputer that could fulfill every wish, created by a long-lost civilization of beings called the Krell. To their detriment, the Krell forgot about one key factor in programming: their own ids.

This limitation for machines seems, in a way, like the limitations that Gödel’s Incompleteness Theorems and Heisenberg’s Uncertainty Principle place on human knowledge: no machine is capable of determining the limits for an unlimited number of rules, and the knowledge of each machine is dependent on another machine.

“Our next question,” Dr. Syropoulos asks, “is: What machine is on the top of the ladder? This apex machine would be able to decide the limits of computability for all other machines, and so define the absolute limits of computability.”

Syropolous recalls that in 1969 computer scientist and engineer Konrad Zuse proclaimed that the whole universe is a “cellular automaton,” a computing device using grid-like cells perhaps not unlike a Turing machine. Zuse’s imagined universal design seems unknowable, thanks to Gödel and Heisenberg, and so what does that say about the computability of our knowledge?

“Can we now say that the human brain is a computing device?” Syropoulos asks, and he concludes, “Therefore the human mind evidently transcends the computing capabilities of Turing machines.” Nobel Prize laureate physicist Sir Roger Penrose would seem to agree. Penrose argues that “whatever consciousness is, it must be beyond computable physics.”

Understanding that consciousness transcends computations could place the philosophy in developing generative AI that mimics human behaviour in a whole new light, one in which mimicry is the result of mere computation and where imagination is far greater than mimicry.

Is the new proof of a computational limit therefore ultimately a proof of the transcendence of the human imagination, something that should therefore be valued far more than algorithms that are ultimately constrained?