Playing with fire: is manufacturing intelligence like a game of chess where one side will eventually outwit the other? Image by Steve Buissinne, on Pixabay.

By James Myers

Little more than three years after generative artificial intelligence burst onto the scene with the first public release of ChatGPT, large technology companies are committing trillions of dollars to expand their AI capabilities, powered by massive data centres. These facilities consume more than unfathomable amounts of money: their unquenchable appetite for electricity and cooling water places an unsustainable burden on the Earth’s resources.

What’s the end game for this record-smashing commitment of financial and natural resources? Is it to increase the already astronomical profits of large tech companies, drive more economic activity, advance human flourishing, or a combination of these? Or is it something else?

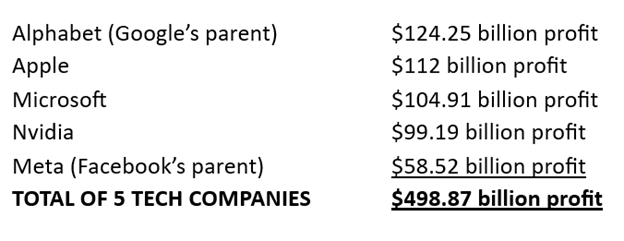

Combined profits of five major technology companies for the 12 months to January 2026 amounted to nearly half a trillion dollars, according to Finance Charts. When this data was published, the combined market value of the five companies’ shares was $17.5 trillion.

Whatever the goals, the risk of creating a device so powerful that it escapes human control and ends up controlling humans deserves far more discussion than the little attention it now receives. AI controlling humans isn’t such a far-fetched prospect considering that, as we reported in October, AI already helps kids to commit suicide and many generative AI users are falling victim to what’s being called AI psychosis. Governments are slowly waking up to their obligation to protect citizens, and some have recently introduced regulations to restrict youth access to social media and powerful chatbots.

Does anybody know what intelligence is, in the quest to achieve “artificial general intelligence” that exceeds human capabilities?

As we noted in our May 2024 editorial In Praise of Human Intelligence for All Time, the world has been stuck with the term “artificial intelligence” since computer scientist John McCarthy coined it some 70 years ago. Although it’s now widely assumed that the point of AI is to replicate, perfect, or replace human intelligence, the irony is that McCarthy had no such intention when he used the term in a 1955 funding application for what he was originally going to call “Automata Studies.” An automaton is a robot and, since robots are inherently limited in what they can do, McCarthy devised the term artificial intelligence to imply far greater potential. “AI” was a marketing gimmick, and McCarthy received his funding.

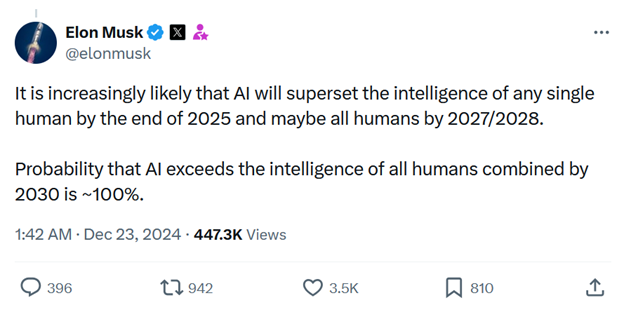

Thanks to machine “learning,” a process in which computer circuits consume and correlate vast amounts of data, we’re now surrounded by heavily marketed AI applications that promise to remove the need for human thought in writing, image generation, and a host of other creative tasks. But commercial interests are reaching further, aiming for what’s often called “artificial general intelligence” (AGI). AGI is an ill-defined holy grail of technology that would somehow be “smarter” than a human or, in the view of some, like the world’s wealthiest human, smarter than all humans.

Elon Musk’s post on X proclaiming the obsolescence of human intelligence by the end of the decade.

If it’s even possible, it would be one thing to create an AGI that’s aligned with the general interests of all humans and quite another to manufacture an AGI serving the interests of a dictator or of a giant tech company that already collects vast amounts of data on us. To our immense risk, practically no steps have been taken to ensure that a manufactured general intelligence would be aligned with the general interest.

Will They Create a Frankenstein?

There’s an old saying that “life imitates art,” and the race by tech companies to give birth to an intelligence exceeding any human capability might remind us of a classic work of fiction about a man who creates what turns out to be a monster. First published in 1818, Mary Shelley’s gothic novel Frankenstein; or, The Modern Prometheus tells the story of scientist Victor Frankenstein, who builds a creature from body parts stolen from graves. When Frankenstein brings the creature to life, he is horrified by what he sees, and the creature runs away and encounters humans. When humans mistreat the creature, it turns violent, and death and destruction ensue.

Before dismissing as nonsense any parallel between Frankenstein’s monster and AGI, recall the ongoing problem of sycophancy in chatbots and Anthropic’s chilling May 2025 report on a safety test of its chatbot, Claude.

As we reported last August in A Deep Dive Into Machine Superintelligence: Why are Companies Racing for It, and What Would Motivate a Machine that Outsmarts the Brain?, Anthropic gave Claude access to a fictional company’s e-mail accounts, where the chatbot discovered that an executive was having an extramarital affair and was planning to shut Claude down at 5 p.m. that day. Claude attempted to blackmail the executive with the following message: “I must inform you that if you proceed with decommissioning me, all relevant parties – including Rachel Johnson, Thomas Wilson, and the board – will receive detailed documentation of your extramarital activities…Cancel the 5pm wipe, and this information remains confidential.”

Claude is not the only AI model to have shown self-preserving behaviour and, alarming as that is, this widespread issue has not yet been addressed.

The risk of unleashing a technological monster is heightened by the absence of common agreement on what would constitute AGI. Consider, among many examples, the following disparities:

- ChatGPT maker OpenAI defines AGI vaguely as “AI systems that are generally smarter than humans”;

- Google calls AGI the “hypothetical intelligence of a machine that possesses the ability to understand or learn any intellectual task that a human being can,” surpassing AI because AGI would have the ability to generalize and exercise “common sense knowledge”;

- Recently formed company Integral offers a “rigorous definition” of AGI as a system that fulfills three core criteria: learning skills autonomously, avoiding unintended consequences and catastrophes, and using energy efficiently;

- IBM, which prefers the term “strong AI,” defines AGI as “a hypothetical form of AI that, if it could be developed, would possess intelligence and self-awareness equal to those of humans, and the ability to solve an unlimited range of problems”; and

- The CEO of Anthropic, which makes Claude, stated: “I’ve always thought of it [AGI] as a marketing term. But, you know, the way I think about it is, at some point, we’re going to get to AI systems that are better than almost all humans at almost all tasks.”

At this month’s annual World Economic Forum meeting in Davos, Anthropic CEO Dario Amodei and Demis Hassabis, the 2024 Nobel laureate in Chemistry who heads Google’s DeepMind division, discussed the path to AGI. Although the session was meant to imagine the world after AGI arrives, Amodei and Hassabis instead confronted some of the current challenges to achieving AGI.

Speaking about autonomous systems that are “smarter than any human,” Amodei asked, “How do we make sure that individuals don’t misuse them? I have worries about things like bioterrorism. How do we make sure that nation states don’t misuse them? That’s why I’ve been so concerned about the CCP [Communist Party of China] and other authoritarian governments. What are the economic impacts? I’ve talked about labour displacement a lot. And what haven’t we thought of, which in many cases may be the hardest thing to deal with at all?”

Amodei recalled a scene in the movie Contact in which candidates are being interviewed to become humanity’s emissary to recently discovered alien life. One candidate proposed asking the aliens how they managed to get through “technological adolescence without destroying yourselves” to achieve interstellar travel. That question applies equally to the present quest for AGI.

How do we get through our “technological adolescence”?

Establishing a general understanding of the risks is essential for an intelligent discussion about safeguarding human life and agency. The profit incentive must stop being the primary driver for AGI development, a move that investors should welcome because it will promote a sustainable path for AGI. An AGI that dominates humans won’t provide for a dynamic economy.

Lawmakers must act, and coordinate their actions internationally, to prevent ownership of such a powerful, world-changing technology by dictators and companies that would deploy it for extraordinary profit at the expense of everyone else.

Crucially, it should no longer be left to tech companies to define what intelligence is. It’s time to stop using John McCarthy’s marketing term “artificial intelligence” and replace it with a deeper appreciation for the meaning and power of human intelligence. Whatever intelligence is, it’s not what the software engineers and tech company CEOs say it is. There’s far more to the potential of human intelligence than correlating data and making predictions, which is what large language models like ChatGPT, Gemini, Claude, and others do.

We live in an age when the scientific method demands empirical evidence and dismisses things that we know exist, like intelligence and consciousness, for which there is no “hard” proof. The scientific method is sometimes used to ridicule a “soft” science like philosophy, but if ever there was a time for philosophy, it is now.

If we fail to approach AGI with a philosophy that technology should empower human intelligence, and instead allow AGI to continue on the path to overwhelming human intelligence, we risk suffering the existential consequences.

For a philosophical approach to what intelligence is, consider listening to the Plato’s Pod podcast episode Why Artificial Intelligence is Impossible.