A human and a pig having a conversation: could this be the future? (Image generated by Ideogram AI)

By Mariana Meneses

Language shapes how we think by setting the boundaries of the reality we construct, even as language itself changes over time.

In her captivating TED talk, “How language shapes the way we think,” cognitive scientist Lera Boroditsky delves into the intricate relationship between language and cognition, drawing attention to compelling examples that illustrate the connections.

Dr. Boroditsky gives the example of speakers of Kuuk Thaayorre, an Aboriginal language of northern Australia. They do not use terms like “left” and “right” and instead use cardinal directions such as north and south for all spatial orientation. She vividly describes how this linguistic feature shapes the speakers’ worldview: even a simple greeting involves saying which direction you are heading. Through such anecdotes, Boroditsky illustrates how language structures our thoughts and perceptions in profound and often unexpected ways.

Neuroscience News reported on a 2023 study suggesting that being bilingual enhances the brain’s predictive and memory functions.

Bilinguals, people who speak two languages, constantly select words from a larger pool of candidates, which may train their brains and enhance certain cognitive functions. The study found that bilinguals with high proficiency in their second language showed better memory and prediction than both monolinguals and bilinguals with low second-language proficiency. Eye-tracking data supported this finding: bilinguals looked longer at objects whose names shared sounds, and this improved their memory retention. The advantage was most pronounced in those with high second-language proficiency.

But what about interspecies communication?

This is becoming increasingly attainable as scientists in many fields explore developments in AI, from archeology (communicating with humans of the past) to biology (communicating with other animals in the present).

“Arrival” is a 2016 science fiction film directed by Denis Villeneuve and based on the short story “Story of Your Life” by Ted Chiang. The movie features humans discovering a geometric means for communicating with extraterrestrials. Image: The Long Shot

We might even imagine, one day, communicating with species not from Earth.

“Arrival” is a movie about a linguist named Louise Banks who is recruited by the US Army when twelve alien spacecraft appear around the world. Her main task is to figure out how to communicate with these extraterrestrials and learn their purpose on Earth before fear of their presence erupts into conflict. Louise uses a combination of linguistic field research methods and intuition to identify patterns in their language, but she could have benefited from some AI help.

Luke Farritor, scroll-decipherer.

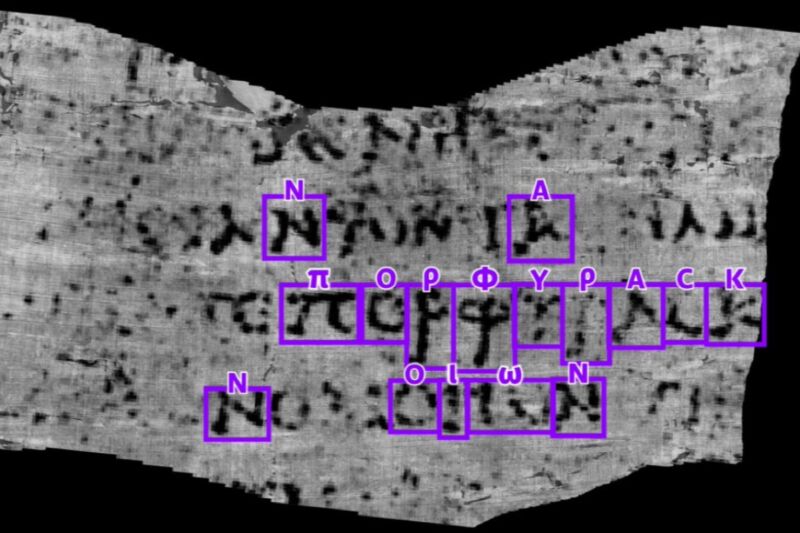

AI’s usefulness in deciphering language was highlighted in October 2023, when a computer science student, Luke Farritor, made a groundbreaking discovery: using a machine learning model, he deciphered text from a badly charred ancient Roman scroll.

This achievement, which earned Farritor a $40,000 prize, was part of the Vesuvius Challenge, a competition to decode these previously unreadable scrolls. Found in the ruins of Herculaneum, which was destroyed along with Pompeii when Mount Vesuvius erupted in AD 79, the scrolls have puzzled scholars for centuries because they are too fragile to unroll. Farritor’s breakthrough, along with that of others such as Youssef Nader, who received a $10,000 prize for similar work, offers hope for unraveling more of the scrolls’ mysteries, potentially transforming our understanding of ancient Roman life and philosophy.

The innovative use of technology, including X-ray scanning and machine learning, has opened up new possibilities for deciphering ancient texts and could lead to further discoveries.

With the help of AI, the first words deciphered meant “purple dye” or “cloths of purple.” Image: Vesuvius Challenge/University of Kentucky

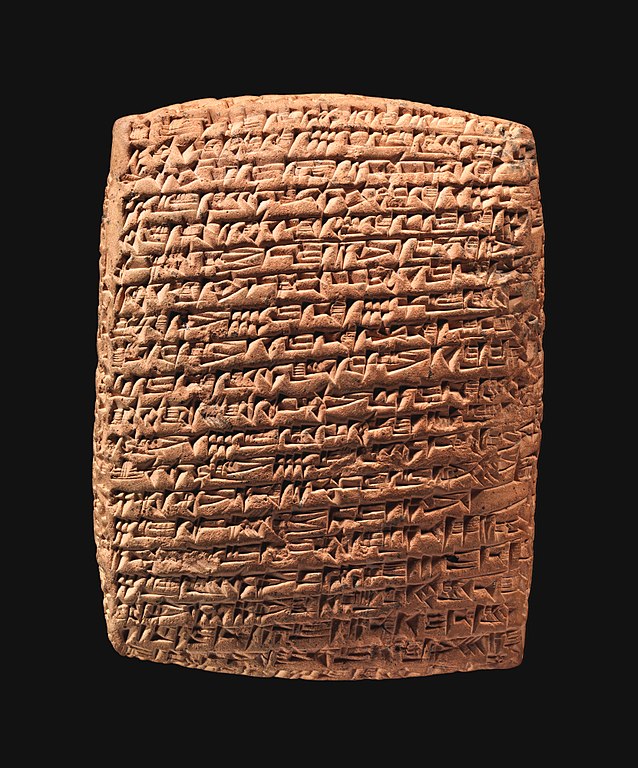

In another example, one that helps shed light on the history of ancient Mesopotamia, a recently published study used machine translation models to translate ancient Akkadian texts into English. It achieved high-quality translations, making ancient Mesopotamian culture more accessible and opening broader possibilities for uncovering archaeological secrets.

The Akkadian Empire marked a significant turning point in the history of ancient Mesopotamia, emerging as the first empire after the long dominance of the Sumerian civilization. Centered on the city of Akkad and its surrounding region, the empire united Akkadian and Sumerian speakers under a single rule. It exercised influence across Mesopotamia, the Levant, and Anatolia, and sent military expeditions as far as Dilmun and Magan, in modern-day Bahrain and Oman.

Map showing the approximate extent of the Akkadian Empire during the reign of Narâm-Sîn (2254-2218 B.C.). Credit: Sémhur.

The study used two methods to translate Akkadian into English. Akkadian was written in cuneiform, one of the earliest writing systems in human history.

One method translated directly from cuneiform to English and the other used transliteration, which is a way of representing cuneiform using the Latin alphabet. The study found that both methods produced high-quality translations, which is a big step towards making the cultural heritage of ancient Mesopotamia more accessible to modern understanding.

Old Assyrian Cuneiform tablet. Credit: Metropolitan Museum of Art

The Akkadian Empire’s political power reached its zenith between the 24th and 22nd centuries BC.

Its historical record poses certain challenges due to the limited availability of epigraphic sources (i.e., writings engraved on stone, metal and other materials), a situation exacerbated by the elusive nature of the empire’s capital, Akkad, which remains undiscovered to this day. However, scholars have managed to glean valuable insights into this ancient civilization through the discovery of cuneiform tablets unearthed in cities under Akkadian rule. These tablets have provided windows into the administrative and cultural aspects of the empire’s operation.

The Akkadian language is characterized by its use of grammatical cases and a system of consonantal roots, typical of Semitic languages. Grammatical cases show the relationship between a noun and the other words in a sentence.

For example, English relies mostly on word order to show who is doing what to whom, while in Akkadian the noun itself changes its ending: “king” is šarrum as the subject of a sentence, šarram as the object, and šarrim after a preposition or in a possessive phrase. A system of consonantal roots means that words are built on a set of consonants that carry the basic meaning, with vowels and other consonants added to create different forms of the word. This is typical of Semitic languages, of which Arabic and Hebrew are two examples.

“The Semitic languages are a branch of the Afroasiatic language family. They include Arabic, Amharic, Hebrew, and other ancient and modern languages.” (Wikipedia).
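The root-and-pattern system can be illustrated with a toy Python sketch. It is a deliberate simplification, since real Akkadian verbs have many more forms. The consonants of the root p-r-s, associated with “deciding” or “separating”, are slotted into vowel templates to produce iprus (“he decided”), iparras (“he decides”) and purus (“decide!”). The `apply_pattern` function and the digit-slot notation for templates are illustrative inventions, not a standard linguistic tool.

```python
# Toy model of Semitic root-and-pattern word formation, using Akkadian.
# Hypothetical notation: digits 1, 2, 3 in a pattern mark where the
# root's first, second and third consonants go.

def apply_pattern(root: str, pattern: str) -> str:
    """Fill the numbered consonant slots in `pattern` from `root`."""
    consonants = root.split("-")  # "p-r-s" -> ["p", "r", "s"]
    return "".join(
        consonants[int(ch) - 1] if ch.isdigit() else ch
        for ch in pattern
    )


ROOT = "p-r-s"  # core meaning: "to decide, to separate"

PATTERNS = {
    "i12u3": "preterite, 'he decided'",
    "i1a22a3": "present, 'he decides' (middle consonant doubled)",
    "1u2u3": "imperative, 'decide!'",
}

if __name__ == "__main__":
    for pattern, gloss in PATTERNS.items():
        print(f"{apply_pattern(ROOT, pattern):<8} {gloss}")
```

Passing the root k-š-d (“to reach, to conquer”) through the same templates yields ikšud, ikaššad and kušud, the corresponding forms of that verb. The consonants carry the meaning and the template carries the grammar, a regularity that both human learners and translation models can exploit.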

AI is transforming the study of ancient Mesopotamia by providing a voice to its past inhabitants, as if we handed them smartphones to share their stories with us. Image generated using Ideogram AI.

The implications of translating ancient languages are numerous.

One of the most significant is that it allows us to better understand the cultural heritage of ancient civilizations. By translating their texts, we can gain a deeper understanding of the history, beliefs, and customs of civilizations that contributed to the evolution of modern society. As we continue to uncover the archaeological secrets of ancient civilizations, what else might we come across?

A dictionary of birdsong might be a thing of the future. Credit: Image generated using DALL-E.

AI could also revolutionize our understanding of non-human communication.

New technology in AI and language processing has changed how we translate languages. By matching geometric patterns in how words, symbols, or sounds relate to one another, machines can process and translate languages on a large scale. These tools can translate text automatically while preserving much of its meaning, opening up new ways to explore communication and meaning.

“More than 8 million species share our planet. We only understand the language of one.”

This statement motivates the Earth Species Project, which is utilizing AI and machine learning to interpret the communications of various species. Drawing on decades of research in bioacoustics and behavioral ecology, they are developing models that also aid in ongoing animal behavior research and conservation efforts. The team envisioned four stages in their journey to decipher the signals of other species using modern machine learning.

Dr. Benjamin Hoffman, Earth Species Project Senior AI Research Scientist

The first stage is to create benchmarks and datasets that experts can check. The second is to learn from animal behavior using machine learning models, and the third is to train models that make sense of animal communication without direct human guidance. In the final stage, they want to generate new signals to communicate with other species. ESP’s scientists collaborate with biologists and other AI researchers to reach these goals, and five projects are currently listed on the organization’s website.

The Self-Supervised Ethogram Discovery project is led by Benjamin Hoffman, whose team uses machine learning (ML), including self-supervised models, to automatically discover patterns of animal behavior in large volumes of raw sensor data. (An ethogram is a catalog of the behaviors a species performs.)

They intend to gather data from a diverse collection of animal-attached tags, or ‘biologgers’, to build datasets with behavior labels already known to be accurate, make the data and code publicly available, and encourage contributions from other researchers in the new field of computational ethology. This will help scientists measure how external factors affect an individual’s behavior.

A biologger is a small electronic device that can be attached to an animal to record its movement, behavior, and other physiological parameters, such as heart rate, temperature, and muscle activity. Biologgers are commonly used in research to study animal behavior, ecology, and physiology in their natural habitats.
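To make “automated discovery of behavior patterns in raw sensor data” more concrete, here is a deliberately simplified Python sketch. It is not the project’s method. ESP describes using self-supervised models, whereas this example swaps in plain k-means clustering, a much older unsupervised technique. The two features per window (mean acceleration and its variance) and all the numbers are hypothetical.

```python
import math

# Hypothetical feature vectors, one per short window of a biologger's
# accelerometer trace: (mean acceleration magnitude, variance).
# The numbers are invented for illustration, not real animal data.
WINDOWS = [
    (1.00, 0.02), (0.98, 0.01), (1.02, 0.03),  # low, steady signal
    (1.25, 0.30), (1.30, 0.35), (1.20, 0.28),  # moderate, rhythmic signal
    (1.90, 1.10), (2.00, 0.95), (1.85, 1.05),  # high, vigorous signal
]


def farthest_point_init(points, k):
    """Deterministic seeding: start from the first point, then keep adding
    the point that is farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return centroids


def kmeans(points, k, max_iterations=100):
    """Plain k-means: group unlabeled feature vectors into k clusters."""
    centroids = farthest_point_init(points, k)
    labels = []
    for _ in range(max_iterations):
        # Assignment step: each window joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(
                    tuple(sum(values) / len(members) for values in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # leave empty clusters in place
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return labels, centroids


if __name__ == "__main__":
    labels, _ = kmeans(WINDOWS, k=3)
    for window, label in zip(WINDOWS, labels):
        print(window, "-> cluster", label)
```

The algorithm only returns numbered groups. Deciding that one cluster means “resting” and another “flying” still requires a biologist’s judgment, which is one reason the project emphasizes datasets whose behavior labels are already known to be accurate.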

Another project underway is the Benchmark of Animal Sounds (BEANS).

Although machine learning is increasingly being used to analyze bioacoustic data in research projects, there are ongoing challenges, such as the diversity of species being studied and the limited availability of data.

To address these challenges, Senior AI Research Scientist Masato Hagiwara developed BEANS, a benchmark for bioacoustics tasks. It measures the performance of machine learning algorithms across a diverse set of species, using 12 datasets that cover a range of animals. BEANS aims to establish a new standard dataset for ML-based bioacoustic research and is publicly available to encourage data sharing and to let researchers compare new techniques with existing ones.
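The core idea behind a benchmark such as BEANS, evaluating one model on many datasets under identical rules, can be sketched in a few lines of Python. This is a hypothetical illustration, not BEANS’s code or interface. The dataset names, the single “energy” number standing in for each audio recording, the threshold detector, and the choice of plain accuracy averaged across datasets are all assumptions made for the example. The real benchmark works on actual recordings with its own metrics.

```python
from statistics import mean

# Hypothetical mini-benchmark: each dataset pairs recordings with
# expert labels (True = an animal call is present). Each recording is
# reduced to a single invented "energy" value purely for illustration.
DATASETS = {
    "dataset_A": ([0.9, 0.2, 0.7, 0.1], [True, False, True, False]),
    "dataset_B": ([0.6, 0.4, 0.3, 0.8], [True, True, False, True]),
}


def toy_detector(energy: float) -> bool:
    """Hypothetical model: report a call whenever energy exceeds 0.5."""
    return energy > 0.5


def accuracy(predicted, actual):
    """Fraction of recordings whose prediction matches the expert label."""
    if len(predicted) != len(actual):
        raise ValueError("predictions and labels must have the same length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def run_benchmark(model, datasets):
    """Score `model` on every dataset under the same rules, then average
    the per-dataset scores so no single dataset dominates the summary."""
    per_dataset = {
        name: accuracy([model(r) for r in recordings], labels)
        for name, (recordings, labels) in datasets.items()
    }
    return per_dataset, mean(list(per_dataset.values()))


if __name__ == "__main__":
    scores, overall = run_benchmark(toy_detector, DATASETS)
    for name, score in scores.items():
        print(f"{name}: {score:.2f}")
    print(f"mean across datasets: {overall:.3f}")
```

Reporting per-dataset scores alongside the average shows where a method struggles, for instance on a species with few recordings, which a single pooled score could hide.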

In the Crow Vocal Repertoire research program, Dr. Benjamin Hoffman is collaborating with Professor Christian Rutz to study the vocal repertoires of two crow species, the Hawaiian crow and the carrion crow.

They aim to analyze the vocalizations of Hawaiian crows in captive breeding populations to understand how the species’ vocal repertoire has changed over time and to support efforts to reintroduce them to the wild. They also use field recordings to investigate the role of acoustic communication in how carrion crows coordinate as a group. The research aims to uncover cultural and behavioral complexity that can inform conservation strategies.

TQR reached out to Earth Species Project CEO Katie Zacarian, who told us:

“At ESP we’re inspired by the potential for AI to help us decode non-human communication, with the ultimate goal of using the new knowledge and understanding that results from that to reset our relationship with the rest of nature. This is a really tough problem that will require collaboration across many fields. We work with a wide range of biologists and institutions to gather data. And with some partners we’re helping them design new experiments. Their contributions, often built on many decades of research, are pivotal.”

What can come from the ability to talk to animals and share culture across species? Credit: Image generated using DALL-E.

If humans were able to talk to animals, it could revolutionize our relationship with the natural world.

On one hand, it could transform how we see animal intelligence, consciousness, and right to life, potentially giving us greater insight into the experiences of species raised for food and changing how they are treated. It may also challenge the anthropocentric worldview that has long dominated human thought.

On the other hand, beyond these ethical questions, communicating with animals could offer a unique perspective on the natural world, enriching both our understanding of it and our interactions with it. This could lead to breakthroughs in fields such as biology, ecology, and animal behavior, inspiring new ideas for technological innovation and providing new perspectives on the nature of consciousness and intelligence.

Ultimately, the ability to talk with animals has the potential to fundamentally reshape our understanding of the world and our place within it, fostering a deeper sense of interconnectedness between humans and the rest of the living world.

Craving more information? Check out these recommended TQR articles:

- Ancient Civilizations of The Future: Could Technologies for Preserving Individual Memories Define Us In a Million Years?

- Burning Museums and Erasing History: The Societal Need for Memory

- Mummies in 3D: Imaging Technology Opens New Possibilities for Preserving Memory in Archeological Findings

- Baby Yingliang: The Best Dinosaur Embryo Fossil Ever Found Is Remarkably Similar to a Chicken Egg

- The Cold War and Whale Sharks: The Unexpected Outcome of Nuclear Tests

If we can understand more clearly how animals communicate, the ethical question of breeding animals for human consumption will come to the forefront.