Human-like robots blur the lines between artificial intelligence and human cognition, raising both fascination and ethical concerns in society. Image generated using Ideogram AI.

By Mariana Meneses

We have evolved to use our senses to gather information about the world more efficiently.

Inspired by nature, scientists have greatly improved the accuracy and efficiency of human-machine interfaces (HMI) by incorporating sensory inputs in the technology.

These advancements empower humans with unprecedented control over their robots’ movements and hold the potential to revolutionize various industries, particularly those involving human-robot collaboration within hazardous environments. At the same time, however, concerns about biosecurity are also on the rise.

What are some of the most fascinating developments connecting nature to machines? How can we ensure that these technologies are optimized to benefit humans while mitigating safety threats?

In just a few decades, our idea of robots has transformed from clunky machines that perform repetitive tasks to sophisticated, autonomous systems capable of performing complex tasks and interacting with humans. Today, robots work alongside humans in various settings, and are equipped with sensors to perceive and navigate their environments. Photo: DJ Shin

Bio-integrated intelligent sensing systems

In a 2022 scientific paper, Mengwei Liu, from the Shanghai Institute of Microsystem and Information Technology, and co-authors review advancements in bio-integrated intelligent sensing systems (BISS) and their diverse applications. BISS are versatile devices that can be implanted, attached to the body, or worn like a watch, and they are designed to sense and analyze physiological signals using a variety of materials and methods.

The authors emphasize their potential in healthcare, in enhancing human capabilities, and in serving as human-machine interfaces for virtual and augmented reality. BISS can monitor and treat medical conditions, improve diagnostic accuracy, enhance athletic performance, and change how people interact with technology. Despite current limitations in areas like sensor accuracy and data security, ongoing progress in BISS promises transformative research and development, shaping future technological and interpersonal interactions.

Also aiming to improve accuracy in robotic tasks, in another 2022 study researchers at the University of Washington tested a new way for humans and machines to communicate and work together.

Led by Amber H.Y. Chou, the researchers combined a joystick with muscle sensors to test whether the pairing could help people perform better at a task requiring precision. The study shows that integrating multiple motor modalities can enhance the performance of human-machine interfaces, with broad implications for fields such as motor rehabilitation and robotic manipulation. The results could also inform the design of future human-machine interfaces in other fields, such as virtual reality, gaming, and military technology.
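To make the idea of combining motor modalities more concrete, here is a minimal Python sketch. It is not the method used in the study: the function names (`emg_envelope`, `fuse_commands`), the flexor/extensor muscle pairing, the moving-average window, and the default 50/50 weighting are all assumptions chosen purely for illustration.

```python
from typing import List, Sequence


def emg_envelope(samples: Sequence[float], window: int = 5) -> List[float]:
    """Rectify raw muscle-sensor samples and smooth them with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        envelope.append(sum(chunk) / len(chunk))
    return envelope


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Keep a value inside [low, high]."""
    return max(low, min(high, value))


def fuse_commands(
    joystick: float,
    flexor_level: float,
    extensor_level: float,
    joystick_weight: float = 0.5,
) -> float:
    """Blend a joystick deflection with a muscle-derived command.

    Assumption: both muscle levels are already normalized to [0, 1], and the
    difference between them is treated as a signed command in [-1, 1].
    """
    if not 0.0 <= joystick_weight <= 1.0:
        raise ValueError("joystick_weight must be between 0 and 1")
    muscle_command = clamp(flexor_level - extensor_level)
    joystick_command = clamp(joystick)
    return joystick_weight * joystick_command + (1.0 - joystick_weight) * muscle_command


if __name__ == "__main__":
    # Hypothetical readings: the last value of each smoothed envelope is the current level.
    flexor = emg_envelope([0.1, -0.9, 0.8, -0.7, 0.9])[-1]
    extensor = emg_envelope([0.1, -0.2, 0.1, -0.2, 0.2])[-1]
    print(fuse_commands(joystick=0.5, flexor_level=flexor, extensor_level=extensor))
```

A weighted average is only one of many possible ways to merge two input channels; a real interface would need per-user calibration and careful filtering of the muscle signals.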

The use of HMIs, particularly in military and surveillance contexts, also raises ethical questions that must be carefully weighed.

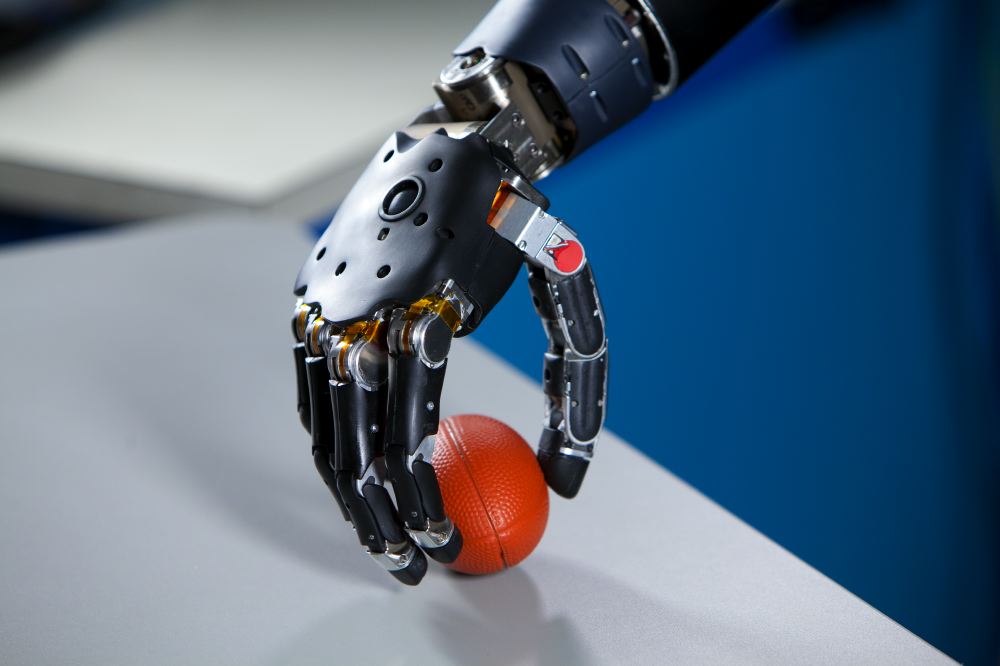

Muscle sensors are a type of bio-integrated sensing system that measures the electrical activity of muscles in the human body, allowing control of devices like robots or prosthetics.

Brain-controlled prosthetics use brain signals to control limb or computer functions. They are still in development and face challenges, including improving accuracy and user-friendliness, and ensuring user data safety. Nevertheless, they hold potential to revolutionize assistive technology for people with disabilities or injuries. Photo: FDA

Human-machine interfaces

Developments in human-machine interfaces have not only increased precision in tasks that require careful manipulation but have also improved control over robots in hazardous environments, such as nuclear facilities, chemical plants, and oil refineries. Because it is not safe for humans to be present in these environments, the robots must be operated remotely, and researchers have been working to give operators more precise remote control over them.

Krzysztof Adam Szczurek and his team have developed a novel multimodal interface to enhance human-robot interaction by incorporating sensory inputs, aiming to enable seamless collaboration between humans and robots. Their system allows multiple operators to control a robot using mixed reality, integrating sight, sound, and touch for an immersive experience akin to playing a video game. Each operator can control different aspects of the robot’s operation, facilitated by a mixed reality display providing real-time environmental feedback.

“Immersion into virtual reality (VR) is a perception of being physically present in a non-physical world. The perception is created by surrounding the user of the VR system in images, sound or other stimuli that provide an engrossing total environment.” (Wikipedia) Credit: Manus VR

Mixed reality merges virtual and real-world environments, allowing users to interact with virtual objects and information as if they were part of the real world. It combines aspects of augmented reality and virtual reality to create an immersive experience that blends the physical and digital worlds.

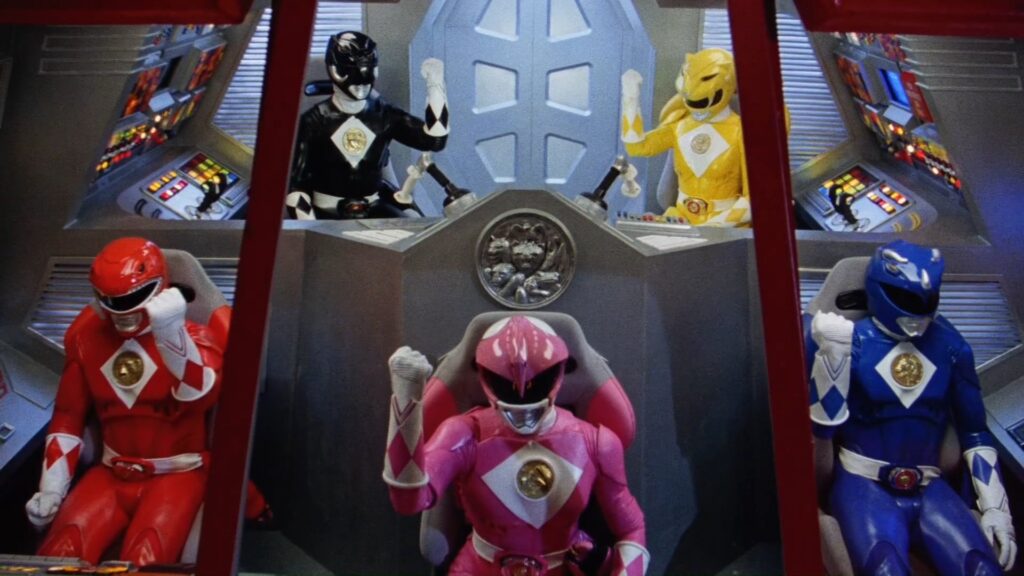

To keep operators aware of what the robot is doing despite the physical distance, the system provides physical sensations to them when the robot moves or interacts with objects. Tested in a simulated hazardous environment, the system effectively enables remote robot control, reminiscent of Power Rangers coordinating the Megazord.

“Power Rangers” is a popular television series that debuted on August 28, 1993. It features a team of teenagers who are chosen to protect the world from evil forces. Each Power Ranger is associated with a unique “Zord”, a colossal mechanical or bio-mechanical robot. When their individual Zords are inadequate for the task, they can join together into a “Megazord”. In this combined form, each Power Ranger controls a different part of the Megazord, such as an arm, a leg, or the head. This teamwork allows them to effectively battle against larger threats, embodying the show’s themes of unity and cooperation. Image: Reddit

AI breakthroughs

In a previous TQR article, we discussed how recent AI breakthroughs in neural bypasses, brain-computer interfaces, and AI-powered NeuroSkin trousers are revolutionizing paralysis treatment. They offer hope for millions by restoring mobility, communication, and independence, with the potential to significantly improve lives as research and development advance.

In another article, we presented new developments in AI-assisted human reproduction and organ transplantation. We also reported on how machines could benefit – and have been benefiting – from learning the intuition of newborn babies. One article delved into how biology is widely used to advance robotics.

Nature has long motivated and inspired technological advancements. For instance, University of Florida computer scientist and roboticist Eakta Jain has studied horses to understand how their behavior with humans could inspire more trustworthy robots. Her work emphasizes the importance of subtle nonverbal cues in conveying respect and trust, aiming to apply these insights to human-robot interactions to foster greater acceptance and collaboration.

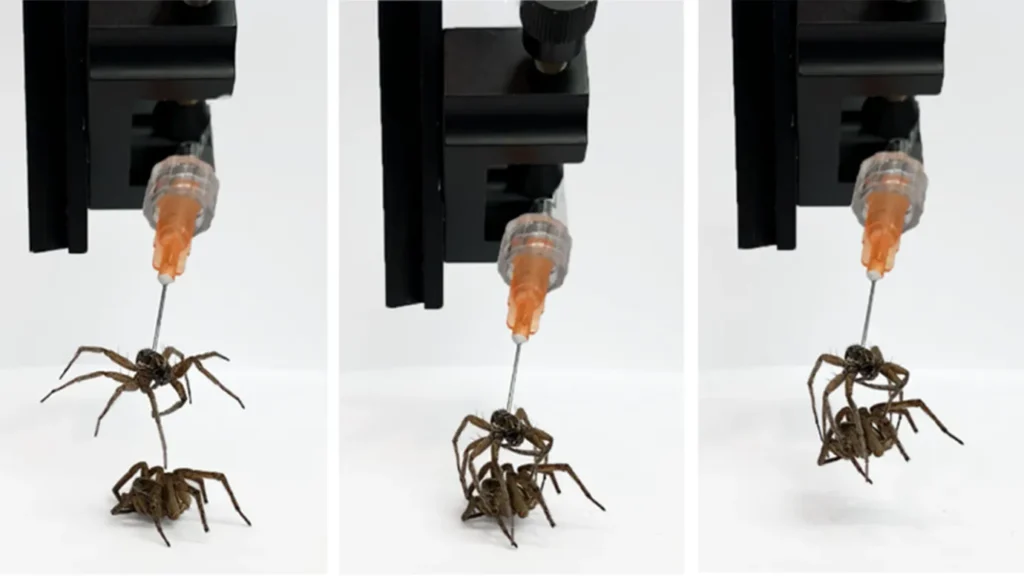

A dead wolf spider becomes a “necrobot”. Credit: Te Faye Yap et al. (2022)

Necrobotics

Another example of the intersection between nature and robotics comes from a 2022 study that used dead spiders’ bodies to create robots. In the field known as “necrobotics,” researchers at Rice University converted the corpses of recently killed wolf spiders into functional grippers capable of manipulating objects. By harnessing the hydraulic properties of spider legs, the team injected fluid into the cadavers to control their grip, demonstrating the potential for bio-inspired robotic design.

A flower-shaped soft robot, developed by researchers at École Polytechnique Fédérale de Lausanne, offers a less invasive method for monitoring electrical activity in the brain. Inserted through a small hole in the skull, the device unfolds to lie flat on the brain’s surface, allowing for precise monitoring without the need for extensive skull removal. Successful testing on a minipig suggests its potential for human use, with future versions possibly capable of bidirectional sensing and stimulation, opening doors for applications in treating seizures and advancing brain-machine interfaces.

Inspiration found in nature

Another example of inspiration in the shapes of nature can be seen in the square-shaped tail of the seahorse, which researchers have found to offer functional advantages in grasping and armor, compared to cylindrical tails found in other animals.

This unique tail structure, composed of square prisms surrounded by bony plates, provides stiffness, strength, and resilience, making it an intriguing model for biomedicine, robotics, and defense systems. The square design creates more contact points when gripping surfaces, making it potentially useful for building better robots as well as military and medical devices. It highlights the potential of biomimicry in inspiring new engineering applications and understanding evolutionary adaptations.

A seahorse is a unique marine fish known for its upright swimming position and horse-like head. Found in shallow tropical and temperate waters globally, seahorses exhibit distinctive behavior and inhabit diverse habitats such as coral reefs and sea grass beds. (Ocean Action Hub, Image: Florin Dumitrescu)

“Biomimetics or biomimicry is the emulation of models, systems, and elements of nature for the purpose of solving complex human problems.” (Wikipedia) A closely related field, “Bionics or biologically inspired engineering is the application of biological methods and systems found in nature to the study and design of engineering systems and modern technology.” (Wikipedia)

A team of U.S. scientists has developed the Bee++, an insect-scale flying robot equipped with four independently actuated flapping wings, allowing it to fly stably in all directions.

By synthesizing high-performance flight controllers and utilizing lightweight materials, such as carbon fiber and mylar, the Bee++ prototype represents a significant advancement in the development of artificial flying insects. The robot holds promise for various applications, including artificial pollination, search and rescue missions, biological research, and environmental monitoring in hostile environments. The research underscores the importance of precise control mechanisms inspired by insect behavior in achieving stable flight for microscale robots.

The Bee++ prototype, featuring carbon fiber and mylar wings and lightweight actuators, is the first to achieve stable flight in all directions. Credit: Bena et al.

Self-improving robotic agents?

Recently, Google DeepMind’s RoboCat team announced the development of a self-improving robotic agent capable of learning and performing a variety of tasks with different robotic arms, significantly reducing the need for human-supervised training.

Utilizing a multimodal model called Gato, RoboCat learns from diverse datasets and autonomously generates additional training data to improve its performance. Through a self-improvement training cycle, RoboCat fine-tunes its skills on new tasks and robotic arms, achieving impressive success rates even on previously untrained tasks. With its ability to rapidly adapt and self-improve, RoboCat represents a significant advancement towards the development of general-purpose robotic agents capable of assisting in various applications.
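The self-improvement cycle described above can be pictured with a toy Python sketch. Everything in it is a stand-in invented for illustration, not DeepMind's code: the "model" is just a table of per-task success probabilities that grows with the amount of data, and the names `train`, `self_improvement_cycle`, and the smoothing constant of 50 are assumptions. The sketch also assumes every practice episode is kept, whether or not it succeeded.

```python
import random
from typing import Dict, List, Tuple

Episode = Dict[str, object]
Model = Dict[str, float]


def train(dataset: List[Episode]) -> Model:
    """Toy training step: a task's success probability rises with its share of data."""
    counts: Dict[str, int] = {}
    for episode in dataset:
        task = str(episode["task"])
        counts[task] = counts.get(task, 0) + 1
    # Assumed saturating curve: more episodes means higher skill, never reaching 1.0.
    return {task: n / (n + 50) for task, n in counts.items()}


def self_improvement_cycle(
    dataset: List[Episode],
    demonstrations: List[Episode],
    task: str,
    practice_runs: int,
    rng: random.Random,
) -> Tuple[List[Episode], Model]:
    """Run one cycle: fine-tune, practice, add the new data, retrain."""
    # 1. Fine-tune on a small set of human demonstrations to get a spin-off agent.
    spin_off = train(dataset + demonstrations)
    # 2. Let the spin-off agent practice the new task, generating its own episodes.
    skill = spin_off.get(task, 0.0)
    self_generated: List[Episode] = [
        {"task": task, "source": "self", "success": rng.random() < skill}
        for _ in range(practice_runs)
    ]
    # 3. Fold demonstrations and self-generated episodes into the training data.
    new_dataset = dataset + demonstrations + self_generated
    # 4. Train a new version of the agent on the enlarged dataset.
    return new_dataset, train(new_dataset)


if __name__ == "__main__":
    rng = random.Random(0)
    existing = [{"task": "stacking", "source": "human"} for _ in range(200)]
    demos = [{"task": "insertion", "source": "human"} for _ in range(100)]
    data, model = self_improvement_cycle(existing, demos, "insertion", 500, rng)
    print(len(data), round(model["insertion"], 3))
```

The point of the sketch is the shape of the loop: a small amount of human-provided data bootstraps an agent that then produces most of its own training data for the next round.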

However, despite the numerous successes in integrating nature and robotics that hold promise for enhancing human capabilities in various ways, there are also concerns regarding the directions we may be taking in the name of ‘progress’.

In a recently published scientific article, Luciano Floridi and Anna C. Nobre, from the Centre for Digital Ethics at Yale University, have argued that we are following a path of “anthropomorphizing machines and computerising minds,” suggesting that the emerging trend of conceptual borrowing between artificial intelligence (AI) and brain and cognitive sciences has led to a blurring of boundaries. They contend that this cross-pollination of technical vocabularies has resulted in computers being described anthropomorphically, akin to computational brains with psychological attributes, while brains and minds are portrayed computationally and informationally, akin to biological computers.

This intermixing, they caution, goes beyond mere metaphorical expressions, and may engender confusion and misleading conceptual assumptions, ultimately influencing the direction of research and its consequences. However, Floridi and Nobre express optimism about the adaptive nature of language and its capacity to shed outdated conceptual baggage as understanding and factual knowledge progress.

Adam Willows argues that technology is not separate from human nature but rather an extension of it. It is a tool that humans use to enhance their lives and extend their capabilities, reflecting their desires and aspirations. Technology shapes and influences human nature, impacting our thinking, behavior, and culture. Image: PxHere

As we navigate the evolving landscape of technology and artificial intelligence, the distinction between using machines for intellectual tasks versus manual labour becomes increasingly important.

While automation has undeniably revolutionized various industries, there’s a need to reflect on the concept of intelligence attributed to machines.

John McCarthy’s account of how he coined the term “artificial intelligence” sheds light on how perceptions of machine capabilities have evolved over time. In 1956, the term was chosen for its marketing allure over McCarthy’s preferred “automata studies,” at a time when the understanding of intelligence in machines differed significantly from today’s assumptions. As we continue to harness the power of automation, it’s crucial to revisit historical perspectives to inform our understanding of intelligence in the context of machines, thereby guiding the direction of technological advancements and their implications for society.

The intertwining of nature and robotics has led to remarkable advancements in human-machine interaction and technological innovation. From bio-integrated sensing systems to brain-controlled prosthetics, researchers continue to push the boundaries of possibility. However, alongside these breakthroughs come ethical considerations and the need for careful navigation to ensure that technological progress aligns with human values and safety.

Despite these challenges, the evolving landscape of robotics offers promising solutions to enhance human capabilities, revolutionize various industries, and address pressing societal needs. As we move forward, fostering responsible innovation and ethical use of technology will be paramount to realizing the full potential of this exciting intersection between nature and robotics.

Craving more information? Check out these recommended TQR articles:

- Biology Delivers Major Advances to Robot Technology

- Human Creativity in the Era of Generative AI

- Beyond the Binary: Can Machines Achieve Conscious Understanding?

- Celebrating Human Creativity Means Guarding Against Stereotyping by AI

- Bodies of the Future: How AI is Assisting Human Reproduction and Organ Transplantation

- Next-Gen Crystal Ball? AI Advances Detection of Childhood Blindness, Dementia, and Cancer

- PLATO and the Quest to Give Machines the Intuition of a Newborn Human

- CRISPR Technology: Editing the Genetic Code, From Plants to Humans